
Since its 2002 updates, Google has announced its major algorithm changes publicly. When harsh penalties rolled out with the first Panda and Penguin updates, it became clear that the company was serious about creating an ethical environment for search users.

In fact, Google updates search every day, and most of these changes go unnoticed. For instance, in 2021 Google made 5,150 improvements to search. The company confirms only major algorithmic updates that are expected to affect search rankings significantly. To help you make sense of Google's major algorithmic changes and ranking factors of the past years, we've put together a cheat sheet with the most important updates and penalties rolled out in recent years, along with a list of hazards and prevention tips for each.

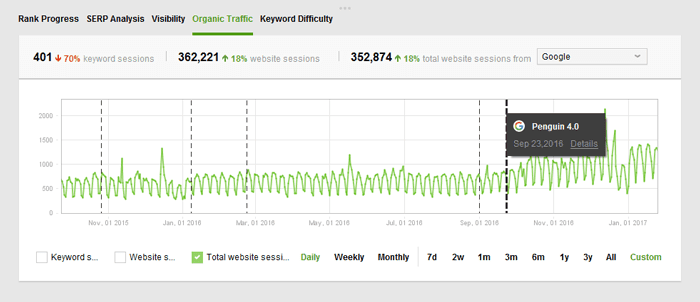

But before we start, let's quickly check whether any of these updates has affected your own site's traffic. SEO PowerSuite's Rank Tracker is a big help here: the tool automatically matches the dates of all major Google updates to your traffic and ranking graphs.

1) Launch Rank Tracker (if you don't have it installed, get SEO PowerSuite's free version here) and create a project for your site by entering its URL and specifying your target keywords.

2) Click the Update visits button in Rank Tracker's top menu, and enter your Google Analytics credentials to sync your account with the tool.

3) In the lower part of your Rank Tracker dashboard, switch to the Organic Traffic tab.

The dotted lines over your graph mark the dates of major Google algorithm updates. Examine the graph to see if any drops (or spikes!) in visits correlate with the updates. Hover your mouse over any of the lines to see what the update was.
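If you'd like to sanity-check what the graph shows, the same comparison can be sketched in a few lines of Python: average daily visits in a window starting on an update date versus the same window just before it. This is a rough sketch, not how Rank Tracker works internally; the seven-day window, the function name, and the sample visit numbers are all assumptions.

```python
from __future__ import annotations

from datetime import date, timedelta


def traffic_change_around(visits: dict[date, int], update_day: date,
                          window: int = 7) -> float | None:
    """Percent change in average daily visits: the `window` days starting on
    `update_day` versus the `window` days just before it.
    Returns None when either side has no data or the baseline is zero."""
    before = [visits[d] for d in (update_day - timedelta(days=i) for i in range(1, window + 1))
              if d in visits]
    after = [visits[d] for d in (update_day + timedelta(days=i) for i in range(window))
             if d in visits]
    if not before or not after:
        return None
    avg_before = sum(before) / len(before)
    if avg_before == 0:
        return None
    avg_after = sum(after) / len(after)
    return (avg_after - avg_before) / avg_before * 100


# Hypothetical daily visits: 100/day for a week before the update, 80/day after it.
visits = {date(2022, 10, 12) + timedelta(days=i): (100 if i < 7 else 80) for i in range(14)}
print(traffic_change_around(visits, date(2022, 10, 19)))
```

A large negative value right after an update date is a hint, not proof: seasonality and changes on your own site can produce the same pattern.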

Currently, the graph in Rank Tracker marks only the major updates, such as Panda, Pigeon, and Fred. But the graph is editable, so you can add any events you consider relevant to your own tracking history: simply right-click in the progress graph area and choose to add an event from the menu.

Did any of Google’s algorithm changes impact your organic traffic in any way? Read on to find out what each of the updates was about, what the main hazards are, and how you can keep your site safe. For convenience, the list starts with the latest updates.

Soon after the Helpful Content Update, Google rolled out a swift spam update, from October 19 to 21, 2022. According to Google, its anti-spam systems work continuously and are improved from time to time, with major changes announced publicly. The link spam update rolled out globally in all languages and targeted both sides of the deal: sites that bought links and sites that sold them. This was the first time Google used its AI-based spam detection system, SpamBrain.

Google's John Mueller mentioned several times in Twitter comments that, in most cases, there was no need to disavow spammy links because Google's systems could recognize and nullify them. With the SpamBrain update, any link credit previously gained through purchased links was expected to be lost. Thus, the update mainly targeted websites involved in link purchasing.

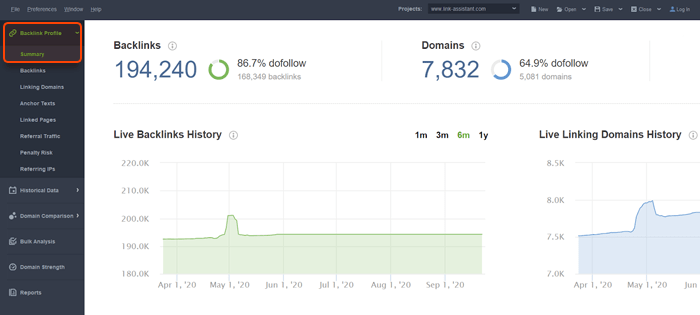

As we have covered many times with previous spam updates, the best thing site owners can do is avoid spammy linking tactics. If a site still got hurt by a spam update, its owner should clean spam out of the link profile and invest more in building quality backlinks. You can use the SEO SpyGlass Backlink Checker to see how bad your backlinks are.

The tool takes into account all negative signals that may indicate links were built on a PBN (private blog network) or in link directories, such as too many outgoing links from the same page, IP, or C-class block, keyword stuffing in anchors, etc.
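SEO SpyGlass does this analysis for you, and its Penalty score formula isn't public. Purely to illustrate two of the signals listed above, here is a minimal Python sketch that groups linking domains by their /24 ("C-class") IP block and measures how many anchors exactly match a target keyword. The threshold of three domains per block, the function names, and the sample domains and IPs (taken from reserved example ranges) are hypothetical.

```python
from __future__ import annotations

import ipaddress
from collections import defaultdict


def c_class_block(ip: str) -> str:
    """Return the /24 network (the so-called C-class block) of an IPv4 address."""
    return str(ipaddress.ip_network(f"{ip}/24", strict=False))


def crowded_c_blocks(linking_domains: dict[str, str],
                     max_domains: int = 3) -> dict[str, list[str]]:
    """Group linking domains by /24 block and keep blocks that host more than
    `max_domains` of them (a hypothetical threshold)."""
    blocks: dict[str, list[str]] = defaultdict(list)
    for domain, ip in linking_domains.items():
        blocks[c_class_block(ip)].append(domain)
    return {block: sorted(domains) for block, domains in blocks.items()
            if len(domains) > max_domains}


def exact_match_anchor_share(anchors: list[str], keyword: str) -> float:
    """Share of backlink anchors that exactly match the target keyword
    (case-insensitive); a high share can hint at anchor keyword stuffing."""
    if not anchors:
        return 0.0
    target = keyword.strip().lower()
    return sum(a.strip().lower() == target for a in anchors) / len(anchors)


domains = {
    "a.example": "203.0.113.10", "b.example": "203.0.113.20",
    "c.example": "203.0.113.30", "d.example": "203.0.113.40",
    "e.example": "198.51.100.5",
}
print(crowded_c_blocks(domains))
print(exact_match_anchor_share(["buy shoes", "Buy Shoes", "example.com", "click here"], "buy shoes"))
```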

The tool shows a Penalty score, which signals how likely a given link is to be penalized by Google. If you find many links that were bought or sold and you can't get rid of them, you can use the disavow tool to tell Google to ignore them.
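A disavow file is a plain-text list: one URL per line, `domain:` entries for whole domains, and `#` for comments. The Python sketch below assembles one; the helper name and the sample URLs are placeholders, and you should check Google's disavow documentation for current file size and encoding limits before uploading.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def build_disavow_file(urls: list[str], domains: set[str], note: str = "") -> str:
    """Build disavow-file text: an optional # comment, one domain: entry per
    domain, then one URL per line (deduplicated and sorted)."""
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{d}" for d in sorted(domains)]
    for url in sorted(set(urls)):
        host = (urlsplit(url).hostname or "").lower()
        if host in domains:
            continue  # redundant: the same host already has a domain: entry
        lines.append(url)
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    ["http://spam.example/page1", "http://other.example/a", "http://other.example/a"],
    {"spam.example"},
    note="Paid links we could not get removed",
)
print(text)
```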

Download SEO SpyGlass

On the other hand, you can use the backlink checker to find good domains and earn high-quality backlinks naturally. Find out more about quality link building in the post by practicing SEO Ann Smarty.

This update introduced a new site-wide ranking signal based on machine learning. Google's systems automatically identify content that seems to have little value, low added value, or is otherwise not helpful to searchers. The first iteration targeted English-language pages. The Helpful Content Update was then expanded globally to all languages in a follow-up rollout that started on December 5, 2022 and lasted about two weeks.

The update targeted content created solely to rank, which Google therefore considers search-engine-first content. Instead, Google recommends creating people-first content. The update aimed to demote sites with a lot of unhelpful content. The signal was designed to be applied automatically and continuously, not as a temporary penalty. If a site had some content that showed evidence of being helpful to searchers, the pages with that content could still rank well.

Google provided a short checklist of questions to help website editors improve the quality of their content:

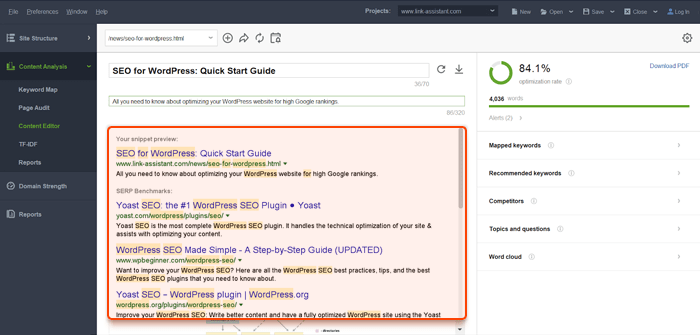

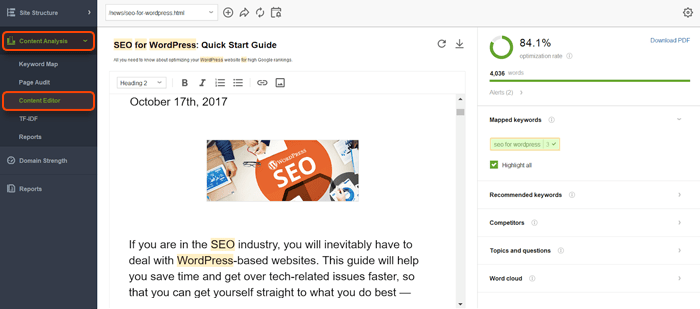

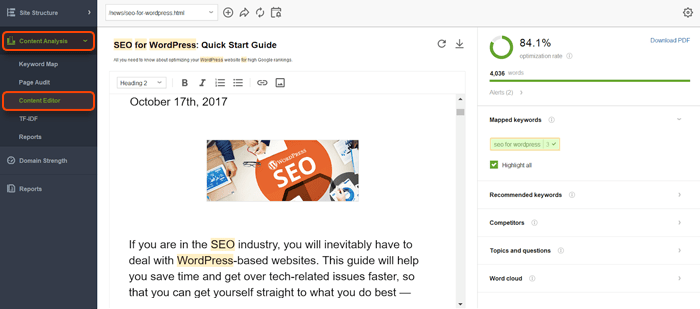

Google also stated that if a site lost rankings due to the Helpful Content Update, it would take several months to regain them. Google suggests removing unhelpful content, but instead of simply deleting pages, you can reverse-engineer the SERPs and rework your content into well-written posts or landing pages for people. You can use Content Editor in WebSite Auditor to create neat copy that is SEO-optimized and written for humans at the same time.

Download WebSite Auditor

Content Editor analyzes the top 10 ranking pages on the SERP for your keyword and observes that:

You can edit your texts on the fly in-app or just download the recommendations as a task to work with in other content writing tools.

Keep in mind that, meanwhile, Google updated its Search Quality Rater Guidelines in several iterations. The guidelines are not directly linked to rankings, but they help to understand how Google sees quality content. In the latest revision, in December, Google added Experience as a criterion to be considered within a site's E-A-T profile, which has now become E-E-A-T. So another important step is to work on your website's authority and provide more information about its relevance and competence in your niche.

Here, Google keeps its own chronology of all ranking and core updates.

On June 3, Google reported an algorithmic adjustment concerning title links. If the search engine finds a mismatch between the language of the title and the main content of the page, it may generate a title link that better matches the primary content.

Google suggests sticking to the best practices for title links. Note that you can review all your existing title tags and title lengths, as well as pages with multiple title tags, in WebSite Auditor's Site Structure > All pages section.

The May 2022 Core Update affected all types of content in all languages, but particularly hit websites with autogenerated or thin content.

The May 2022 Core Update is said to have been one of the largest since 2021. SEO experts noticed that it hit sites with autogenerated and thin content hard, with such sites losing around 80-90 percent of their traffic.

Sites with redirects were also affected by this core update. In the first week of the update, they even saw ranking gains, but then started to lose traffic.

Smaller websites with good internal linking saw a different effect: if they lacked the authority to reach top positions, they still benefited a bit from the update, provided they had proper contextual internal linking.

An interesting impact of the May 2022 Core Update was a clean-up in knowledge panels and featured snippets. It looks like getting into featured snippets will become harder and harder. Is there a way out? Improve your website's E-A-T signals. As Google reiterates its mission to "make search results more helpful and useful for anyone", there is nothing left to do but follow the Webmaster Guidelines and build up your website's authority.

In light of the May 2022 update, we suggest using WebSite Auditor to examine and improve your content.

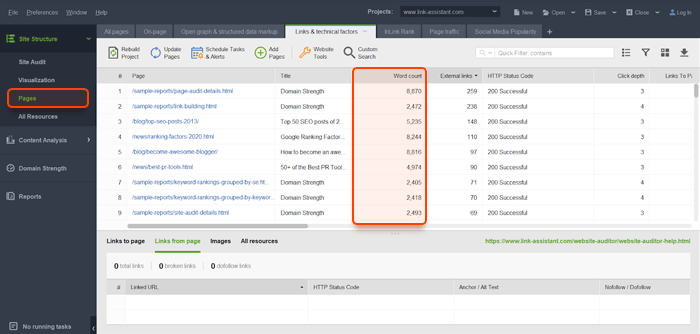

1. Run the tool to audit your site and find pages with thin content. Go to Site Structure > Pages, switch to the On-page tab and sort the pages by Word Count column.

Download WebSite Auditor

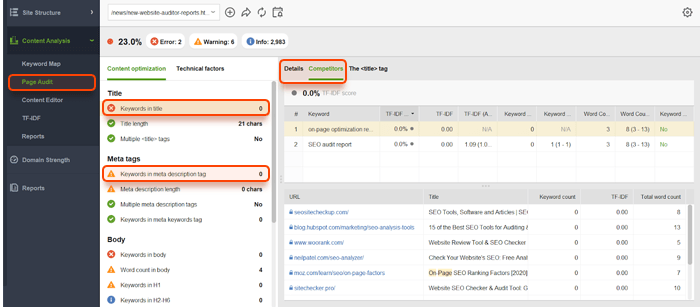

2. In the lower workspace, you can examine the lists of internal and external links page by page. In addition, Page Audit lets you examine the existing content on each page. In particular, review the list of anchors in Content Audit > Body > Keywords in Anchors.

3. You can also use the Visualization tool to review your website's internal linking.
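The Word Count sort in step 1 can be approximated outside the tool. Here is a minimal Python sketch using only the standard library: it strips tags, skips `<script>` and `<style>` contents, counts words, and lists pages below a minimum. The 300-word cut-off and the function names are assumptions; word count alone doesn't make a page thin, so treat the output as a shortlist for manual review.

```python
from __future__ import annotations

import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, ignoring anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(re.findall(r"\w+", " ".join(parser.chunks)))


def thin_pages(pages: dict[str, str], min_words: int = 300) -> list[tuple[str, int]]:
    """(url, word count) for pages under `min_words`, thinnest first."""
    counts = [(url, word_count(html)) for url, html in pages.items()]
    return sorted((c for c in counts if c[1] < min_words), key=lambda c: c[1])


pages = {
    "/about": "<p>We sell shoes.</p><script>var tracking = 1;</script>",
    "/guide": "<p>" + "word " * 400 + "</p>",
}
print(thin_pages(pages))  # [('/about', 3)]
```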

Next, use WA's Content Editor to create content for your pages. The tool suggests topic ideas and top keywords to use, based on the analysis of top competitors from the SERP.

On March 22, 2022, Google announced the third version of the Product Reviews update; the full rollout took about three weeks and was completed around April 11. The update had less impact than the previous product reviews update in December and the first one a year earlier.

On February 28, Google started rolling out the Page Experience update for desktop. The rollout was announced to take a couple of weeks and be completed by the end of March, but the company then reported that it had concluded on March 6.

This update built on the page experience update that Google rolled out for mobile between June and August 2021, and it uses the same page experience signals. It covers the Core Web Vitals metrics (LCP, FID, and CLS), whose thresholds now apply to desktop ranking as well. Other page experience signals, such as the HTTPS protocol and the absence of intrusive interstitials, remain crucial too. The mobile-friendliness signal, however, does not apply to desktop URLs.
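For reference, Google publishes "good" and "poor" boundaries for each metric and assesses them at the 75th percentile of page loads. The Python sketch below encodes those boundaries and rates a set of measured samples; the nearest-rank percentile and the sample values are our simplification, not Google's exact aggregation.

```python
from __future__ import annotations

import math

# Google's published "good" / "poor" boundaries for the three Core Web Vitals.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "FID": (100, 300),   # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric: str, value: float) -> str:
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def percentile_75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def assess(samples_by_metric: dict[str, list[float]]) -> dict[str, str]:
    """Rate each metric by its 75th-percentile sample."""
    return {m: rate(m, percentile_75(s)) for m, s in samples_by_metric.items()}


print(assess({"LCP": [1.2, 2.0, 2.4, 3.1],
              "FID": [40, 80, 120, 90],
              "CLS": [0.02, 0.3, 0.05, 0.08]}))
```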

The page experience update may have affected desktop rankings. Keep in mind, though, that the page experience score is only one of the factors that affect your rankings.

There are three metrics to keep in mind: Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS).

To understand how desktop pages perform in terms of page experience, webmasters can use the Page Experience report in Search Console, which now includes two separate dashboards. The report shows the percentage of good URLs and their total number of impressions. The numbers are approximate, since different factors are measured over different time frames. It also links to the section with Core Web Vitals warnings and errors, indicating the number of URLs that need fixes.

You can also use WebSite Auditor to detect page speed issues on both desktop and mobile devices: let it scan your site and find technical SEO issues related to page speed and mobile-friendliness. Alternatively, you can connect the PageSpeed Insights API and get all the data straight from Google. All URLs with critical issues will be listed in the Site Audit report, for each issue individually.

You can also check page speed for each separate URL in the Page Audit > Technical Audit report. There you will see the page speed score and the list of diagnosed issues, which you can copy or export and send to your developers for fixes.

Download WebSite Auditor

The update concerns companies with a physical location who need visibility in Google Maps and the local pack.

On December 16, Google reported concluding a local search update that had started rolling out on November 30 and ran through December 8. It has been nicknamed the Vicinity Update for its impact on Google Maps results: local search results are now shown based on the listing's proximity to the searcher. The update rebalanced various factors Google considers when generating local search results. Google referred businesses to its general guidance on improving their local search presence, according to which results are ranked by three main criteria: relevance, distance, and prominence.

To meet the requirements, make sure you've fulfilled the following recommendations:

For more information, check out our guide to Local SEO Ranking Factors in 2021.

On December 1, 2021, Google reported rolling out another major update related to product reviews in English, the second since April. The rollout was expected to take three weeks and could affect how product reviews rank in search.

The update raised concerns for sites with numerous low-quality, thin-content reviews.

Google suggests sticking to the best practices and writing high-quality content that proves the authenticity of the reviews. In addition to the guidelines on writing reviews that were published in April, there are a couple more tips:

Add supporting visuals, audio, or other links that prove the reviewer's experience in using the product;

Google announced a broad core update that rolled out over two weeks starting November 17. The SEO community was a bit stunned to see a core update right on the eve of Thanksgiving and Black Friday. Perhaps the stakes were not that high, since experts noticed SERP volatility in favor of some big retailers. This core update also boosted reference materials such as dictionaries, Wikipedia, some popular directories, and law and government resources, while news websites lost visibility. The health category saw a noticeable shake-up. Note that these stats were measured while the update was still rolling out. Google said little about this update, referring as usual to its guidelines for webmasters.

Launched: November 3, 2021

Rollouts: Until November 11

Goal: Hit spam content

Since June 2021, Google has implemented a series of counter-spam updates. The first in the series was Google's response to New York Times articles documenting how the slander industry preys on victims of online slander. Google has even created a new concept it called “known victims.” According to NYT, the search giant was changing its algorithm to prevent slanderous websites from appearing in the list of results when someone searches for a person’s name. This has been a part of a major shift in how Google demotes harmful content.

In November 2021, Google confirmed another spam update, which started on November 3 and rolled out within a week. According to Google,

Spam updates deal with content that doesn't follow our guidelines. Core updates are simply an adjustment to how we assess content overall.

This update has made a more tangible impact on SERPs, presumably targeting cloaked content injected with links.

Cloaking is a black-hat SEO technique in which the content presented to the search engine spider differs from the content presented to the user's browser. The aim of cloaking is to deceive search engines into displaying a page they otherwise would not display.
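One quick self-check, for example after a hack that injects links only for search engines, is to fetch the same URL with a Googlebot user-agent and with a browser user-agent and compare the responses. A minimal Python sketch follows; the 0.9 similarity threshold and the browser string are assumptions. Dynamic pages (ads, timestamps, tokens) will differ a little anyway, `SequenceMatcher` gets slow on very large pages, and cloaking based on IP address rather than user-agent won't be caught this way.

```python
import difflib
import urllib.request

# Googlebot's classic user-agent string; the browser string is a hypothetical stand-in.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"


def fetch(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Download a page while presenting the given User-Agent header."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def looks_cloaked(html_for_bot: str, html_for_user: str,
                  min_similarity: float = 0.9) -> bool:
    """Flag the page when the two responses are less similar than `min_similarity`."""
    ratio = difflib.SequenceMatcher(None, html_for_bot, html_for_user).ratio()
    return ratio < min_similarity


# Usage (requires network access):
# url = "https://www.example.com/"
# print(looks_cloaked(fetch(url, GOOGLEBOT_UA), fetch(url, BROWSER_UA)))
```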

1. Use SEO SpyGlass to check your backlink profile for penalty risks.

Download SEO SpyGlass

2. Avoid black-hat link building. You may have missed our revamp of LinkAssistant, built specifically for link building. The tool now has an upgraded built-in link checker, with the most popular link-building techniques highlighted as specific methods. Consult our link-building guide describing the worst and the best link-building techniques for 2021.

Download LinkAssistant

3. Follow Google's Webmaster Guidelines to ensure there are no bad linking practices in your website's link profile.

Launched: Mid-August, 2021

Rollouts: Google keeps refining it

Goal: Provide more relevant search results to user queries

On August 24, Google confirmed the page titles update, under which titles in search results might be replaced with different text from the web page. SEOs know that Google had made minor changes to titles before, such as appending the brand name. With this update, the title may be completely replaced by the page's H1 heading. Besides HTML tags, when generating a new title for the SERP, Google may also consider other important text on the page, as well as the anchor text of links pointing to the page.

We examined our own titles as well and found that Google had changed titles that were too short by appending the brand name (which coincided with the domain name). For titles that were too long, it used H1 headings, which could also be truncated and have the company name appended.

Besides, there are several punctuation marks to avoid in titles, namely colons, dashes, and vertical bars. Here are a few tweaks to speed up your on-page analysis in WebSite Auditor.

1. Find too-long titles. In Site Audit > On-page section you’ll see a warning about too-long titles.

Download WebSite Auditor

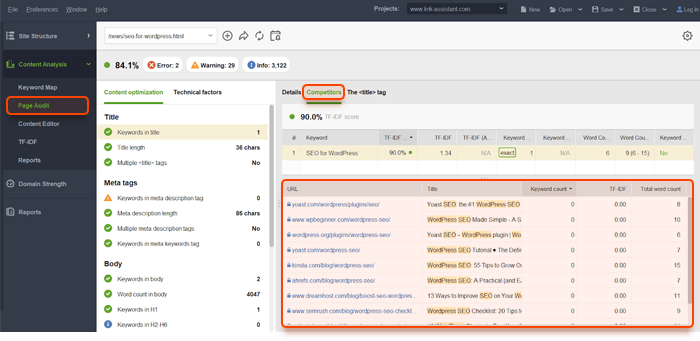

2. Rewrite titles and H1. You can also run a page-by-page audit of your pages’ titles and headlines together, and see what your competitors have for the same topic. Check your pages in Page Audit > Content Audit module.

Download WebSite Auditor

You can also get a quick overview of all your titles and H1 headings in the Pages > All pages tab (add the columns with the Edit visible columns button and check all headings).

Download WebSite Auditor

We advise keeping your titles within a reasonable length (for our site it was 55-60 characters, but the limit may differ for a different domain), free of keyword stuffing, and without explanatory punctuation.
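These checks are easy to script if you want to review exported titles in bulk. The Python sketch below flags titles that are too long or too short, use colons, separator dashes, or vertical bars, or repeat a word suspiciously often. The 60-character default follows the range above; the minimum length, the repeat limit, and the function name are hypothetical defaults you should tune for your own site.

```python
from __future__ import annotations

import re
from collections import Counter


def title_issues(title: str, max_len: int = 60, min_len: int = 10,
                 max_repeats: int = 2) -> list[str]:
    """Return human-readable warnings for one <title> text."""
    t = title.strip()
    issues = []
    if len(t) > max_len:
        issues.append(f"too long ({len(t)} > {max_len} chars)")
    if len(t) < min_len:
        issues.append(f"too short ({len(t)} < {min_len} chars)")
    # Colons, vertical bars, and dashes used as separators (" - " or " \u2013 ").
    if re.search(r":|\||\s[-\u2013]\s", t):
        issues.append("contains colon, dash, or vertical bar")
    # The same word appearing more than `max_repeats` times hints at keyword stuffing.
    words = re.findall(r"\w+", t.lower())
    repeated = sorted(w for w, n in Counter(words).items() if n > max_repeats)
    if repeated:
        issues.append("possible keyword stuffing: " + ", ".join(repeated))
    return issues


print(title_issues("Shoes | Buy Shoes | Cheap Shoes Online Store Best Shoes Deals 2021"))
print(title_issues("Google Algorithm Updates Cheat Sheet"))  # []
```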

Google keeps refining the algorithm and has started a feedback thread for the page titles update.

Launched: July 26, 2021

Rollouts: Two weeks onward

Goal: Improve search results and reduce the effectiveness of deceptive link building techniques

Summer 2021 was hot for updates. On July 26, Google announced a link spam update. Although Google proudly reports that the effectiveness of link schemes has decreased over the past decade, its search engineers still see sites intentionally building spammy links to manipulate rankings.

The update aimed to fight link spam and reward sites with high-quality content and good user experience. As part of its ongoing effort against link spam, Google may take both algorithmic and manual actions if a site is caught using link schemes. That is why we suggest the following steps to protect your site against link penalties.

1. Check your site's link profile for low-quality links with the SEO SpyGlass backlink checker. If you notice a massive flow of low-quality backlinks, ask the webmasters of the linking domains to take them down.

2. If the linking site owners don't respond and don't remove the harmful backlinks, use the disavow tool to tell Google not to consider these links. Disavowed links will not harm your rankings.

3. Try to use white-hat link building practices only. Consult our comprehensive guide on how to do link building SEO with SEO PowerSuite. And here are a few tips about link-building tools and tactics that are still legal and actionable.

4. Take care of your outbound links as well. If you have sponsored content or affiliate links on your site, mark them with the rel="sponsored" attribute.
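To find outbound links that still lack the attribute, you can scan a page's HTML for links to your sponsor or affiliate domains. Here is a minimal Python sketch with the standard-library HTML parser; the set of paid domains is something you'd supply yourself, and the example domains and function names are placeholders.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urlsplit


class _LinkCollector(HTMLParser):
    """Collects (href, rel tokens) for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links: list[tuple[str, set[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        a = dict(attrs)
        href = a.get("href")
        if href:
            rel = set((a.get("rel") or "").lower().split())
            self.links.append((href, rel))


def unmarked_paid_links(html: str, paid_domains: set[str]) -> list[str]:
    """Links to any of `paid_domains` (or their subdomains) without rel="sponsored"."""
    parser = _LinkCollector()
    parser.feed(html)
    parser.close()
    result = []
    for href, rel in parser.links:
        host = (urlsplit(href).hostname or "").lower()
        is_paid = any(host == d or host.endswith("." + d) for d in paid_domains)
        if is_paid and "sponsored" not in rel:
            result.append(href)
    return result


html = ('<a href="https://shop.partner.example/x" rel="nofollow">A</a>'
        '<a href="https://partner.example/y" rel="sponsored noopener">B</a>'
        '<a href="/about">C</a>')
print(unmarked_paid_links(html, {"partner.example"}))
```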

On June 24, Google announced the release of a spam update as part of its regular work to improve search results.

According to Google's reports, it constantly improves its AI algorithms to remove online scams from top results. The algorithms detect 40 billion spammy pages each day. Such pages pose a threat to online security because they may install malware and steal users' private data. Google claims that over several years it reduced the amount of automatically generated or scraped content by 80%. Among other things, it detects and thwarts such black-hat SEO techniques as:

To stay on the safe side, follow the Webmaster Guidelines to ensure the security and credibility of your pages. Use Search Console to monitor your site's security status: you may get a notice in Search Console if someone compromises your site.

And remember that it's easier to prevent hacking than recover from it. So use the best practices to protect your site's security.

The Page Experience update was planned to roll out gradually, to ensure that no serious unintended issues happen. The update considers several page experience signals, including the three Core Web Vitals metrics: loading speed, visual stability and interactivity.

In addition, the Top Stories carousel on Google Search now includes all news content that meets the Google News policies. This means that to appear in Top Stories, pages no longer need to be AMP pages, nor do they need the highest Core Web Vitals score. The AMP badge will also be removed from AMP content.

Google expects that the Page Experience Update will not make drastic changes to rankings. However, it introduced a new tool to safeguard sites' positions through page experience checks. The new Page Experience Report in Search Console checks the following factors:

And here is one more how-to for WebSite Auditor.

Anticipating the Page Experience Update, the WebSite Auditor team added a check for Core Web Vitals metrics.

Step 1. Launch WebSite Auditor with your site project.

Step 2. Get a PageSpeed Insights API key: it's free and allows checking 25,000 pages a day.

Step 3. Start the task to run the Core Web Vitals check, and analyze the results.

The PageSpeed Insights report provides Lab data (simulated stats showing how your site performs under ideal conditions) and Field data (how real users interacted with your pages over almost a month). While the PageSpeed Insights tool checks one page at a time, in WebSite Auditor you can analyze pages in bulk, filtering them by values and factors.
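If you'd rather query the API directly, the Python sketch below builds a PageSpeed Insights v5 request and pulls out the field-data category for each metric. The endpoint and the `url`, `strategy`, and `key` parameters follow Google's public API; the `loadingExperience.metrics` response shape reflects our reading of the v5 reference, so double-check it against the current docs. The helper names are hypothetical.

```python
from __future__ import annotations

import json
import urllib.request
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, api_key: str, strategy: str = "mobile") -> str:
    """Build a runPagespeed request URL for one page."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy, "key": api_key})


def fetch_psi(page_url: str, api_key: str, strategy: str = "mobile",
              timeout: float = 60.0) -> dict:
    """Call the API and return the decoded JSON response (needs network access)."""
    with urllib.request.urlopen(psi_request_url(page_url, api_key, strategy),
                                timeout=timeout) as resp:
        return json.load(resp)


def field_categories(response: dict) -> dict[str, str]:
    """Map each field (real-user) metric to its category, e.g. FAST or SLOW.
    Returns {} when the response has no field data for the page."""
    metrics = response.get("loadingExperience", {}).get("metrics", {})
    return {name: data.get("category") for name, data in metrics.items()}


# Usage (requires network access and your own API key):
# data = fetch_psi("https://www.example.com/", "YOUR_API_KEY", strategy="desktop")
# print(field_categories(data))
print(psi_request_url("https://example.com/", "KEY", "desktop"))
```

To check pages in bulk, loop over your URL list and collect `field_categories` for each, keeping the daily quota in mind.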

Google reported it was a regular core update of the kind that happens several times a year. This one was the first of a two-part update, with the second part planned for July. After the 10-day rollout, there were mixed reports on SERP fluctuations and ranking changes. The second part of the update might reverse some of the impact of the June changes.

Nothing in this algorithm update was site-specific. Google referred to its regular guidance for core updates.

On April 8, 2021, Google announced an improvement to its ranking systems aimed at better rewarding product reviews, initially in English. The problem behind this update is the abundance of thin-content product reviews that bring little value to users. The improvement was expected to boost rankings of rich, in-depth product reviews.

The safeguard against the Product Reviews Update is following general quality guidelines: reviews should be based on original research and insightful analysis from experts or enthusiasts who know the topic well. Google lists several questions to help you produce a high-quality review that shouldn't be vulnerable to this ranking improvement. For example, a good review covers pros and cons of the product, provides descriptive samples, measurements, and addresses issues that influence buyer decision. Briefly, no hackwork, write in-depth and comprehensive reviews.

The Passage Ranking update was announced in November 2020 and launched on February 10, 2021 for the English language, with the full rollout expected shortly. Google promised that the update would affect only 7% of all queries.

Passage Ranking was aimed at helping long-form pages rank for individual parts of their content. Google did not share any specific guidelines to optimize for the update; the advice was just to keep focusing on great content. SEOs registered only slight volatility on SERPs, stating the impact was minimal.

Passage Ranking was a change in the ranking mechanism and did not introduce any new type of search result feature. With this update, you may want to pay more attention to headings and paragraphs, and to the semantics and structure of the subtopics you cover within a bigger topic. That said, the algorithm is expected to do its job well in any case.

On December 3, 2020, Google rolled out a broad core algorithm update, as it does several times per year. Many SEOs noticed SERP volatility as early as December 1, though it might have been unrelated. The main question discussed in the aftermath was: why did Google roll out such a massive update right in the holiday shopping season?

The impact so far remains unclear, with equally many domains reporting gains and losses. Google's guidance on such updates remains the same as covered before (please check the E-A-T quality guidelines).

Actually, Google makes search algorithm changes almost daily and officially confirms only major updates that might affect SERPs significantly (these happen several times a year). Google confirmed a major core algorithm update on January 13, 2020, and one of the latest updates rolled out on May 4.

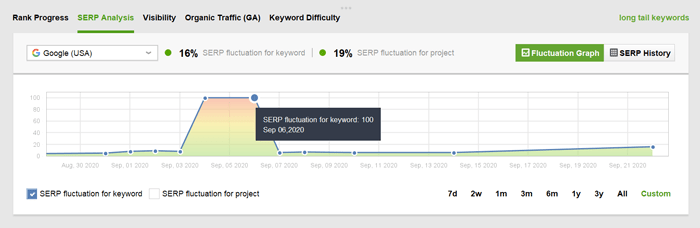

As a quick tip, you can guess that some update has taken place from the keyword fluctuation graph in Rank Tracker’s SERP Analysis.

Go to Target Keywords > Rank Tracking, click the SERP Analysis tab in the lower screen, and switch to the Graph view. You need to click the Record SERP data button for every project for which you'd like to see the SERP fluctuation graph (the feature is available only in the paid version of Rank Tracker).

An average daily fluctuation is about 6.3%. Red spikes indicate that the SERP has changed significantly on that day, probably because of some algorithmic updates.
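Rank Tracker doesn't document how it computes this percentage, so the Python sketch below uses a simple stand-in of our own: the share of top-10 positions whose URL changed since the previous day, with a "spike" defined as more than twice the average daily change. Both the metric and the factor of two are assumptions for illustration, as are the function names and sample URLs.

```python
from __future__ import annotations


def serp_change(previous: list[str], current: list[str], depth: int = 10) -> float:
    """Share (0.0 to 1.0) of the top-`depth` positions whose URL differs between two days."""
    def at(results: list[str], i: int):
        return results[i] if i < len(results) else None

    changed = sum(1 for i in range(depth) if at(previous, i) != at(current, i))
    return changed / depth


def spike_days(snapshots: list[list[str]], factor: float = 2.0) -> list[int]:
    """Indexes of days whose change exceeds `factor` times the average daily change."""
    changes = [serp_change(a, b) for a, b in zip(snapshots, snapshots[1:])]
    if not changes:
        return []
    average = sum(changes) / len(changes)
    return [day + 1 for day, c in enumerate(changes) if average > 0 and c > factor * average]


stable = [f"https://site{i}.example/" for i in range(10)]
history = [stable, list(stable), list(stable), list(reversed(stable))]
print(spike_days(history))  # [3]
```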

Typically, there’s no precise explanation of how exactly a Google algorithm change may affect your site, which is why there is nothing specific to fix quickly. Google Webmasters advised focusing on quality and provided a checklist for keeping your site content in line with their guidelines. Briefly, sites should provide original, up-to-date, high-quality content that brings real value to users.

Our recent survey among SEO experts revealed that with every new core update, people do nothing but monitor ranking stats. And if a site got penalized, they fix traditional SEO factors, with a focus on content and links. Again, WebSite Auditor comes to your rescue explaining how to improve content and keep your site free from SEO errors.

Launched: January 22, 2020

Goal: Prevent URLs in featured snippets from appearing twice on the first page of organic search results

Google rolled out this change on January 22, 2020. It caused a bit of a fuss among SEOs, which has finally been systematized here.

What used to be called position zero in the search engine results is now the first position. It’s up to you whether you want to get into the featured snippet or appear somewhere among results 2 to 10. This ranking change was a one-time update and affected 100% of results.

It still makes sense to monitor what pages are ranking with rich snippets and for what keywords. You can use the Rank Tracker tool to record a history of SERPs and to receive an alert when your site gets into a featured snippet for a certain keyword. In the Target Keywords module go to Ranking Keywords > Ranking Progress tab, and switch to the Rank Progress > Rank History tab.

Launched: July 1, 2019

Rollouts: Onward until September 2020

Goal: As most users now search on Google from mobile devices, Googlebot primarily crawls and indexes pages with the smartphone user-agent going forward

Starting from July 1, 2019, mobile-first indexing was enabled by default for all new websites. For older or existing websites, Google continued to monitor and evaluate pages; full mobile-first indexing has been enabled since September 2020.

Mobile-first indexing means that most of the crawling is done with the smartphone user-agent, though the traditional desktop Googlebot will occasionally crawl sites as well.

This means that most of the information that appears in search comes from the mobile versions of sites. One of the recommended approaches for newly launched websites is to use responsive design instead of a separate mobile version. If you still choose to serve separate site versions, make sure the information is consistent across desktop and mobile: the same content, images, and links; the same metadata (titles, meta descriptions, robots meta tags); and consistent structured data.
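A quick way to spot inconsistencies between separate desktop and mobile versions is to extract the same facts from both and compare them. The Python sketch below compares the title, meta description, robots meta tag, link and image counts, and the number of JSON-LD blocks. It's a minimal sketch: it counts structured-data blocks rather than comparing their contents, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class _PageFacts(HTMLParser):
    """Collects the on-page facts that should match across mobile and desktop."""

    def __init__(self):
        super().__init__()
        self.facts = {"title": "", "description": None, "robots": None,
                      "links": 0, "images": 0, "jsonld_blocks": 0}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        name = a.get("name", "").lower()
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and name in ("description", "robots"):
            self.facts[name] = a.get("content", "").strip()
        elif tag == "a" and a.get("href"):
            self.facts["links"] += 1
        elif tag == "img":
            self.facts["images"] += 1
        elif tag == "script" and a.get("type", "").lower() == "application/ld+json":
            self.facts["jsonld_blocks"] += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.facts["title"] += data


def page_facts(html):
    parser = _PageFacts()
    parser.feed(html)
    parser.close()
    facts = dict(parser.facts)
    facts["title"] = facts["title"].strip()
    return facts


def parity_issues(desktop_html, mobile_html):
    """Names of the facts that differ between the desktop and mobile versions."""
    d, m = page_facts(desktop_html), page_facts(mobile_html)
    return [key for key in d if d[key] != m[key]]


desktop = ('<title>Shoes</title><meta name="description" content="Buy shoes">'
           '<a href="/a">A</a><a href="/b">B</a>')
mobile = '<title>Shoes</title><a href="/a">A</a>'
print(parity_issues(desktop, mobile))  # ['description', 'links']
```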

And certainly, audit your site and fix errors such as duplicate content issues, restricted or blocked content, missing alt texts, low-quality images, and page speed issues. You can check out Google's best practices for mobile-first indexing, as well as audit your site with WebSite Auditor.

Launched: October 25, 2019

Rollouts: December 9, 2019

Goal: Help the search engine understand context, especially in spoken queries

BERT is a deep-learning technique created for natural language processing: the algorithm interprets natural language and understands context based on pre-training on a body of text from the web. Such a technique inside the ranking algorithm became particularly necessary with the spread of voice search assistants. Google named BERT as “one of the biggest leaps in the last five years”.

Google rolled out its first BERT update for the English language, and then for over 70 other languages. Earlier reports said it had affected 10% of all search queries.

Actually, there isn’t much to worry about with BERT: the safeguards are the same as for Hummingbird and RankBrain. Just provide high-quality content that answers user queries in the most appropriate form.

The algorithm was aimed not at penalizing sites but at boosting the ones that better fit user queries. Are there any tweaks here? First, use long-tail research to explore your users’ intent. Second, examine the top 10 on the SERPs to understand user intent for those keywords and what your competitors do to rank their pages at the top.

Go for long-tail keywords with the Related Questions research method, including People Also Ask and Question Autocomplete. You’ve got to research more conversational keywords. Optimize your pages to meet the needs behind those keywords as fully as possible. It is recommended to use natural language, for example, in the form of questions in a FAQ section. This raises your chances of competing for top search rankings and even a featured snippet.

Check out our latest video for beginner-friendly tips and tools.

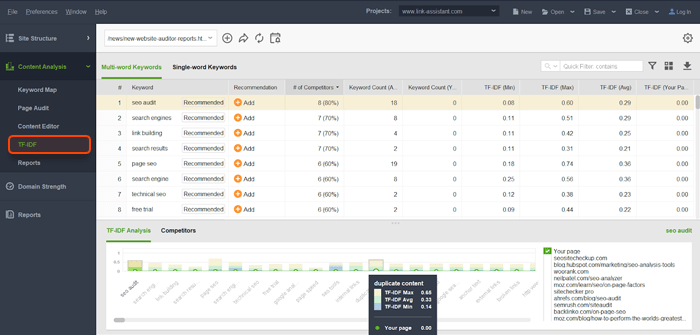

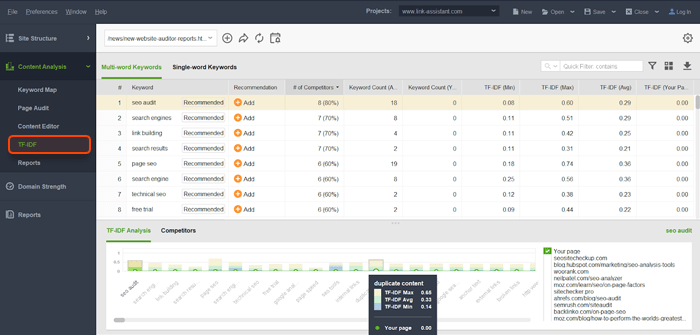

To comply with BERT and other major algorithms related to natural language, you’ve got to improve your pages' scope and relevance. The best way to do it is with the Content Editor and TF-IDF analysis tools integrated in WebSite Auditor. Admittedly, TF-IDF is an older technique that works differently from BERT. However, it can give you a good clue for your optimization: it lets you extract keywords by analyzing your top 10 competitors and collecting the keywords they have in common.

Move to Content Analysis > TF-IDF, enter the URL to analyze in the Pages search bar in the upper left corner, and see how your keyword usage compares to competitors' on the TF-IDF chart. The tool will suggest whether you should use a keyword more or less often in your text.
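To see the idea behind the chart, here is a textbook TF-IDF computation in Python (term frequency times a common smoothed inverse document frequency) over competitor texts, followed by a more/less suggestion for terms most competitors share. WebSite Auditor's own formula and thresholds aren't public, so the 50% sharing cut-off, the 0.5 and 1.5 ratio bounds, the tiny stop-word list, and the function names are assumptions. It expects at least one competitor text.

```python
from __future__ import annotations

import math
import re
from collections import Counter

STOP_WORDS = {"a", "an", "and", "the", "for", "of", "to", "in", "on", "with", "is"}


def tokenize(text: str) -> list[str]:
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP_WORDS]


def smoothed_idf(docs: list[list[str]]) -> dict[str, float]:
    """idf(t) = ln((1 + N) / (1 + df(t))) + 1, so terms shared by every doc keep weight 1."""
    n = len(docs)
    df = Counter(term for tokens in docs for term in set(tokens))
    return {term: math.log((1 + n) / (1 + d)) + 1 for term, d in df.items()}


def tfidf(tokens: list[str], idf: dict[str, float]) -> dict[str, float]:
    counts = Counter(tokens)
    total = len(tokens) or 1
    return {term: c / total * idf.get(term, 0.0) for term, c in counts.items()}


def keyword_suggestions(my_text: str, competitor_texts: list[str], min_share: float = 0.5,
                        low: float = 0.5, high: float = 1.5) -> dict[str, str]:
    comp_tokens = [tokenize(t) for t in competitor_texts]
    idf = smoothed_idf(comp_tokens)
    vectors = [tfidf(tokens, idf) for tokens in comp_tokens]
    mine = tfidf(tokenize(my_text), idf)
    suggestions = {}
    for term in idf:
        share = sum(term in v for v in vectors) / len(vectors)
        if share < min_share:
            continue  # not something most competitors have in common
        average = sum(v.get(term, 0.0) for v in vectors) / len(vectors)
        ratio = mine.get(term, 0.0) / average
        if ratio < low:
            suggestions[term] = "use more"
        elif ratio > high:
            suggestions[term] = "use less"
    return suggestions


competitors = ["seo audit tool for site audit", "site audit and seo checklist",
               "technical seo audit guide"]
print(keyword_suggestions("keyword research guide for bloggers", competitors))
```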

Launched: August 2019

Goal: Improve search results by more appropriate match with user intent, especially for businesses dealing with people’s wellbeing (health, fitness, and finance)

The Google Medic update got its name when SEOs saw what types of sites were affected by these algorithm changes: mainly health and fitness websites, though not only those. After it rolled out in the first week of August, heavy ranking fluctuations were noted for business sites as well. Google did not go into much detail, saying it was just a core update and that there was no fix for it. The most widely shared guess is that the change aimed to better match pages with the intent behind queries.

When Google rolled out this update, the affected sites had to work really hard to recover. Since then, user intent has become more relevant than ever. Just keep in mind three types of queries that imply a certain intent: informational (what/where/how), investigational (compare/review/kinds of), and transactional (order/get/buy). Dive a bit into long-tail keyword research to find out what users are looking for on your pages, and optimize them accordingly.

Use Rank Tracker's Keyword Research module to mine your best keyword opportunities. Once you have them all collected in the Keyword Sandbox, use filters to group them by intent and other important metrics, then add them to your Keyword Map, where you can map each keyword, or group of keywords, to a certain landing page. Use tags and notes to mark which pages are transactional and which are informational.
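If you export your keyword list, a rough first pass at grouping it by intent can also be scripted. The sketch below is a naive, rule-based classifier that only looks for the modifier words listed above; the modifier lists and the order of the checks (transactional first) are assumptions, and real intent often needs a look at the SERP itself.

```python
import re

# Modifier words from the three intent types described above (illustrative,
# deliberately short; extend them for your niche). Checked in this order.
INTENT_MODIFIERS = {
    "transactional": ["order", "get", "buy"],
    "investigational": ["compare", "review", "kinds of"],
    "informational": ["what", "where", "how"],
}


def classify_intent(keyword: str) -> str:
    """Return the first intent whose modifier appears as a whole word or phrase."""
    text = keyword.lower()
    for intent, modifiers in INTENT_MODIFIERS.items():
        for modifier in modifiers:
            if re.search(rf"\b{re.escape(modifier)}\b", text):
                return intent
    return "unclassified"


def group_by_intent(keywords: list[str]) -> dict[str, list[str]]:
    """Bucket keywords by their detected intent."""
    groups: dict[str, list[str]] = {}
    for kw in keywords:
        groups.setdefault(classify_intent(kw), []).append(kw)
    return groups
```

Because matching is on whole words, a plural such as "reviews" won't match "review"; adding variants to the lists is the simplest fix.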

Launched: July 9, 2018

Goal: Boost faster mobile pages

Google had begun shifting its search algorithm towards mobile experience long before, so what makes this update special? Google officially confirmed that, starting with the Speed update, page speed became a ranking factor for mobile searches.

First, check your mobile pages for load speed. For this purpose, use Google's PageSpeed Insights, Lighthouse, or the Mobile-Friendly Test. The latter is integrated into WebSite Auditor.
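If you'd rather check mobile speed for many URLs at once, PageSpeed Insights also offers a public API (v5). The minimal sketch below builds a request for the mobile strategy and reads the Lighthouse performance score from the JSON response; for regular or bulk use you'll likely want to add an API key via the `key` query parameter, which this sketch leaves out.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a PageSpeed Insights v5 request URL for one page."""
    return f"{PSI_ENDPOINT}?{urlencode({'url': page_url, 'strategy': strategy})}"


def performance_score(report: dict) -> float:
    """Extract the Lighthouse performance score (reported as 0-1) as a 0-100 value."""
    return report["lighthouseResult"]["categories"]["performance"]["score"] * 100


def fetch_mobile_score(page_url: str) -> float:
    """Call the API over the network and return the page's mobile performance score."""
    with urlopen(psi_request_url(page_url), timeout=60) as response:
        return performance_score(json.load(response))
```

Looping `fetch_mobile_score()` over your key landing pages gives a quick list of the slowest mobile pages to investigate further in WebSite Auditor.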

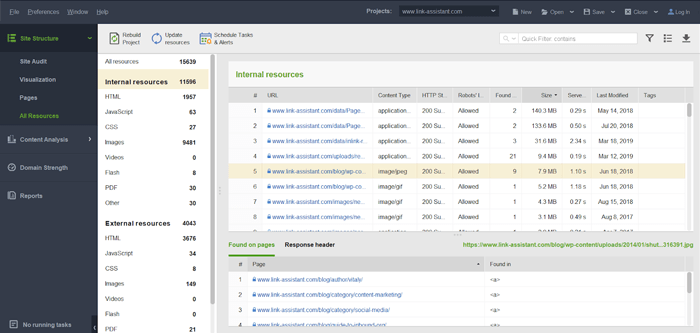

When you spot slow pages, you can find the cause by looking deeper into your files with a WebSite Auditor check. Go to Pages > All Resources > Internal Resources, and filter them by Speed and Server Response Time. Here you will see which resources might need resizing, compression, etc.

Besides, Google's latest update concerns Core Web Vitals, a set of user experience factors that are going to become part of the ranking algorithm sometime next year. Loading speed and interactivity are among them. So it certainly makes sense to prepare for future Google algorithm changes by improving your site speed and user engagement.

Launched: March 8, 2017

Rollouts: —

Goal: Filter out low quality search results whose sole purpose is generating ad and affiliate revenue

The Fred update got its name from Google's Gary Illyes, who jokingly suggested that all updates be named "Fred". Google confirmed the update took place but refused to discuss its specifics, saying simply that the sites Fred targets are the ones that violate Google's webmaster guidelines. However, studies of Fred-affected sites show that the vast majority of them are content sites (mostly blogs) with low-quality articles on a wide variety of topics that appear to be created mostly to generate ad or affiliate revenue.

1. Review Google's guidelines. This may seem a tad obvious, but reviewing the Google Webmaster guidelines and Google Search Quality Guidelines (particularly the latter) is a good first step in keeping your site safe from Fred algorithm penalties.

2. Watch out for thin content. Look: The New York Times, The Guardian, and the Huffington Post all show ads; literally every publisher site does. So it's not the ads that the Fred algorithm targets; it's the content. Audit your site for thin content, and update low-quality, low-word-count pages with relevant, useful information.

To start the check, navigate to the Pages module in SEO PowerSuite's WebSite Auditor and look for the Word count column. Now, sort the pages by their word count by clicking on the column's header to instantly spot pages with too little content.

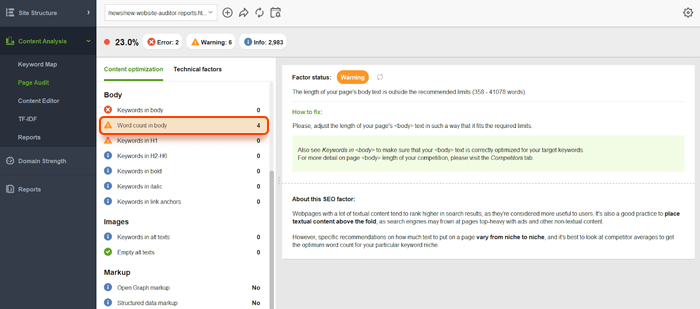

But remember: short pages can do perfectly fine for certain queries. To see if your content length is within a reasonable range for your target keywords, go to Content Analysis and select the page you'd like to analyze. Enter the keyword, and hang on a sec while the tool examines your page and your top-ranking competitors' pages. When the analysis is complete, look at Word count in body. Click on this factor to see how long the competitors' pages are.

To see each individual competitor's content length, click on Keywords in body and switch to Competitors. Here, you'll get a list of your top 10 competitors for the keywords you specified, along with the total word count on each of these pages. This should give you a solid idea of approximately how much content searchers expect when they search for your target keywords.
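Once you have those competitor word counts, the comparison itself is simple arithmetic. The sketch below, assuming you've copied the counts into a list by hand, reports the competitors' range and median and how many words you'd need to reach the median; using the median (rather than the average) is a design choice that keeps one unusually long page from skewing the target.

```python
from statistics import median


def word_count(text: str) -> int:
    """Count whitespace-separated words in a page's visible text."""
    return len(text.split())


def content_length_gap(own_words: int, competitor_words: list[int]) -> dict:
    """Compare your word count with the range covered by top-ranking competitors."""
    low, high = min(competitor_words), max(competitor_words)
    mid = median(competitor_words)
    return {
        "min": low,
        "median": mid,
        "max": high,
        "words_to_median": max(mid - own_words, 0),  # 0 if you're already there
        "within_range": low <= own_words <= high,
    }
```

For example, a 600-word page against competitors at 800, 950, 1,100, 1,200, and 1,500 words falls outside their range and is 500 words short of the 1,100-word median.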

Launched: September 1, 2016

Rollouts: —

Goal: Deliver better, more diverse results based on the searcher's location and the business' address

The Possum update is the name for a number of algorithm changes in Google's local ranking filter. After the Possum update, Google returns more varied results depending on the physical location of the searcher (the closer you are to a certain business physically, the more likely you'll see it among local results) and the phrasing of the query (even close variations now produce different results). Somewhat paradoxically, Possum also gave a boost to businesses located outside the physical city area. (Previously, if your business wasn't physically located in the city you targeted, it was hardly ever included in the local pack; now this isn't the case anymore.) Additionally, businesses that share an address with another business of a similar kind may now be de-ranked in the search results.

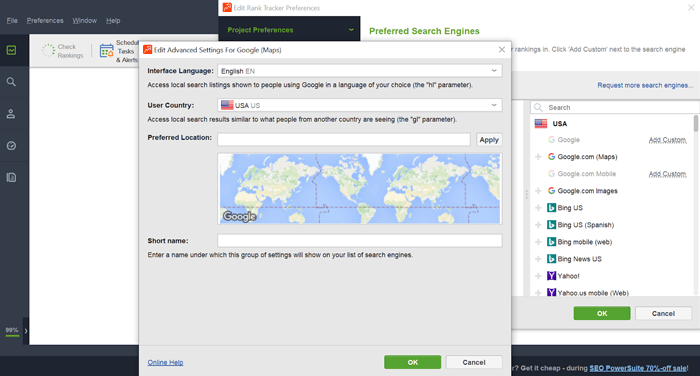

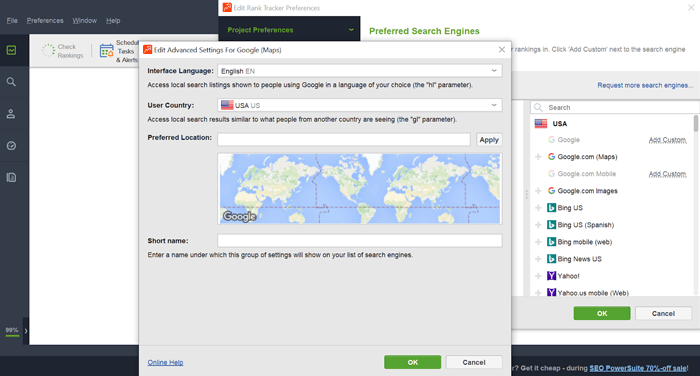

1. Do geo-specific rank tracking. After Possum, the location from which you check your rankings plays an even bigger part in the results you get. If you haven't done this yet, now is the time to set up a search engine with a custom location to check positions from in SEO PowerSuite's Rank Tracker. To get started, open the tool, create a project for your site, and press Add search engines at Step 4. Next to Google (or Google Maps, if that's what you're about to track), click Add Custom. Then specify the Preferred location (since Possum made the searcher's location so important, it's best to specify something as precise as a street address or zip code).

You can always modify the list of the local search engines you're using for rank checking in Preferences > Preferred Search Engines.

2. Expand your list of local keywords. Since Possum resulted in greater variety among the results for similar-looking queries, it's important that you track your positions for every variation separately.

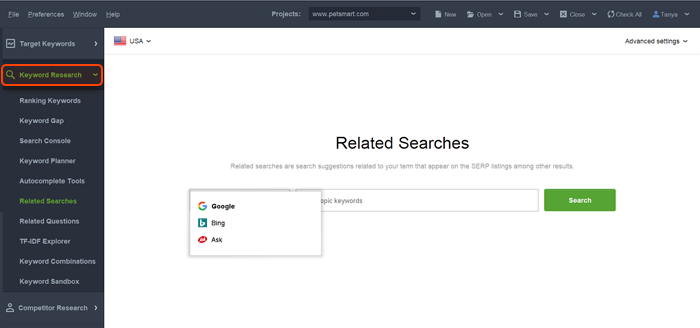

To discover those variations, open SEO PowerSuite's Rank Tracker and create or open a project. Go to the Keyword Research module and click Suggest keywords. Enter the localized terms you are already tracking and hit Next. Select Google Autocomplete as your research method.

This should give you an ample list of terms that are related to the original queries you specified. You may also want to repeat the process for other methods, particularly Google Related Searches and Google Trends for even more variations.

Overall, with the Possum update, it's becoming even more important to optimize your listings specifically for local search. For a full list of local ranking factors and how-to tips, jump here.

Launched: October 26, 2015 (possibly earlier)

Rollouts: —

Goal: Deliver better search results based on relevance & machine learning

RankBrain is a machine learning system that helps Google better decipher the meaning behind queries, and serve best-matching search results in response to those queries.

While RankBrain has a query processing component, it also has a ranking component (when RankBrain was first announced, Google called it the third most important ranking factor). Presumably, RankBrain can somehow summarize what a page is about, evaluate the relevance of search results, and teach itself to get even better at it over time.

The common understanding is that RankBrain relies in part on traditional SEO factors (links, on-page optimization, etc.), but also looks at other, query-specific factors. It then identifies relevance features on the pages in the index and arranges the results in SERPs accordingly.

1. Maximize user experience. Of course, RankBrain shouldn't be your main reason to serve your visitors better. But it is a reason why neglecting user experience can get you down-ranked in SERPs.

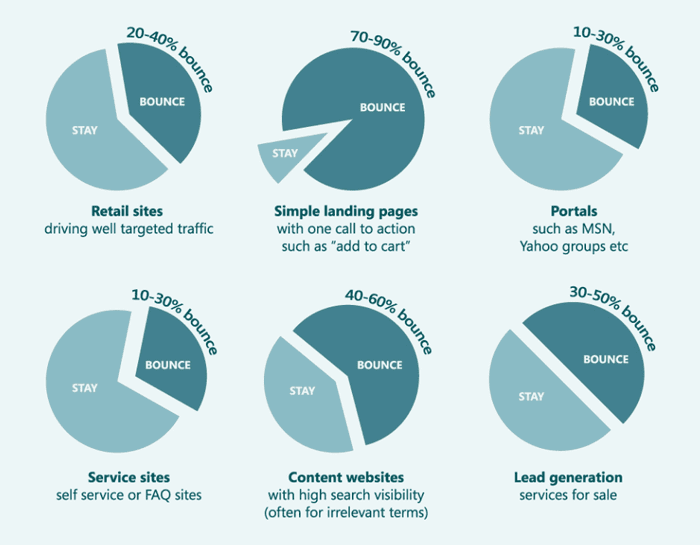

Keep an eye on your pages' user experience factors in Google Analytics, particularly Bounce Rate and Session Duration. While there are no universally right values to stick to, here are the averages across various industries reported by KissMetrics (you can find the complete infographic here).

If your bounces for some of the pages are significantly above these averages, those are the low-hanging fruit to work on. Consider A/B testing different versions of these pages to see which changes drive better results.

As for session duration, keep in mind that the average reading speed (for readers who skim) is 650 words per minute. Use this as a guide when assessing how much time visitors spend on your pages, and see if you can increase it by diversifying your content, for example by including more images and videos. Additionally, examine the pages with the best engagement metrics, and use the takeaways when crafting your next piece of content.
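To turn the 650-words-per-minute guideline into a number you can compare against Google Analytics, divide the page's word count by that speed. In the sketch below, the "engagement ratio" (actual average time on page divided by the expected skim time) is our own illustrative metric, not a Google Analytics field: a 1,300-word article takes about 2 minutes (120 seconds) to skim, so an average time on page of 60 seconds gives a ratio of 0.5.

```python
SKIM_WPM = 650  # skim-reading speed quoted above


def expected_read_seconds(word_count: int, wpm: int = SKIM_WPM) -> float:
    """Seconds a skimming reader would need to get through the page."""
    return word_count / wpm * 60


def engagement_ratio(avg_time_on_page_s: float, word_count: int) -> float:
    """Actual average time on page divided by the expected skim time.

    Values well below 1 suggest visitors leave before even skimming the page.
    """
    return avg_time_on_page_s / expected_read_seconds(word_count)
```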

2. Do competition research. One of the things RankBrain is believed to do is identify query-specific relevance features of web pages, and use those features as signals for ranking pages in SERPs. Such features can be literally anything on the page that can have a positive effect on user experience. To give you an example, pages with more content and more interactive elements may be more successful.

While there is no universal list of such features, you can get some clues of what they may be by analyzing the common traits of your top ranking competitors. Start SEO PowerSuite's Rank Tracker and go to Preferences > Competitors. Click Suggest, and enter your target keywords (you can — and should — make the list long, but make sure you only enter the terms that belong to one topic at a time). Rank Tracker will now look up all the terms you entered and come up with 30 sites that rank in Google's top 30 most often. When the search is complete, choose up to 10 of those to add to your project, examine their pages in-depth, and look for relevance features you may want to incorporate on your site.
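The idea behind "sites that rank in Google's top 30 most often" is easy to approximate yourself if you already have the ranking URLs for each keyword. In the sketch below, the `serps` dictionary (keyword mapped to an ordered list of ranking URLs) is hypothetical input, not a Rank Tracker export format; each domain is counted at most once per keyword, and your own domain is excluded.

```python
from collections import Counter
from urllib.parse import urlparse


def top_competitors(serps: dict[str, list[str]], own_domain: str,
                    depth: int = 30, limit: int = 10) -> list[tuple[str, int]]:
    """Return (domain, number_of_keywords_it_ranks_for) pairs, most frequent first."""
    counts = Counter()
    for urls in serps.values():
        # A set, so a domain ranking twice for one keyword is counted once.
        domains = {urlparse(u).netloc.lower().removeprefix("www.") for u in urls[:depth]}
        counts.update(domains)
    counts.pop(own_domain, None)
    return counts.most_common(limit)
```

Keeping the keyword set to a single topic, as recommended above, matters here too: mixing topics would surface generalist sites rather than true topical competitors.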

Launched: April 21, 2015

Rollouts: —

Goal: Give mobile friendly pages a ranking boost in mobile SERPs, and de-rank pages that aren't optimized for mobile

Google's Mobile-Friendly Update (aka Mobilegeddon) was meant to ensure that pages optimized for mobile devices rank at the top of mobile search, and, consequently, to down-rank pages that were not mobile friendly. Desktop searches were not affected by the update.

The Mobile-Friendly Update applied at the page level, meaning that one page of your site could be deemed mobile friendly and up-ranked, while the rest might fail the test. It was also just one of many ranking signals working together, so if a page ranked high thanks to its great content and user engagement, the Mobile-Friendly Update did not affect it much.

1. Go mobile. There are a few mobile website configurations to choose from, but Google's recommendation is responsive design. Google also has specific mobile how-tos for various website platforms to make going mobile easier for webmasters.

The Mobile-Friendly Update was the first in a series of Google algorithm changes leading to mobile-first indexing. Since July 1, 2019, Google has used mobile-first indexing by default for all new sites, meaning it crawls them primarily with its smartphone crawler. So if you are launching a new site, make sure its pages are mobile friendly from day one.

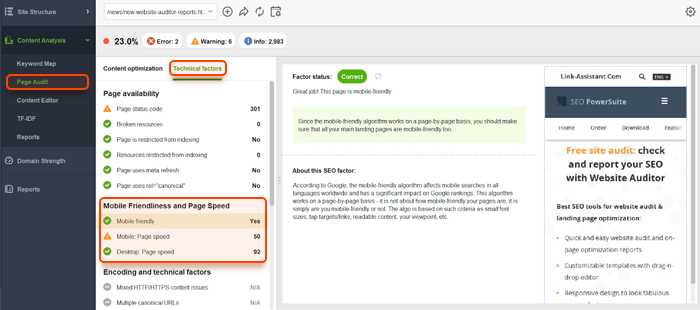

2. Take the mobile friendly test. Going mobile isn't all it takes — you must also pass Google's mobile friendliness criteria to get up-ranked in mobile SERPs. Google's mobile test is integrated into SEO PowerSuite's WebSite Auditor, so you can check your pages' mobile friendliness quickly.

Launch WebSite Auditor and open your project. Go to Content Analysis and click Add page to pick a page to be analyzed. Enter your target keywords and give the tool a moment to run a quick page audit. When the audit is complete, switch to Technical factors on the list of SEO factors on the left, and scroll down to the Page usability (Mobile) section.

The Mobile friendly factor will show you whether or not your page is considered mobile friendly overall; here, you also get a mobile preview of your page. The factors below will indicate whether your page meets all of Google's mobile friendliness criteria. Click on any factor with an Error or Warning status for specific how-to fix recommendations.

Launched: July 24, 2014 (US)

Rollouts: December 22, 2014 (UK, Canada, Australia)

Goal: Provide high quality, relevant local search results

Google Pigeon (initially rolled out for US English results only) dramatically altered the results Google returns for queries in which the searcher's location plays a part. According to Google, Pigeon created closer ties between the local algorithm and the core search algorithm, meaning that the same SEO factors are now being used to rank local and non-local Google results. This update also uses location and distance as a key factor in ranking the results.

Pigeon led to a significant (at least 50%) decline in the number of queries local packs are returned for, gave a ranking boost to local directory sites, and connected Google Web search and Google Map search in a more cohesive way.

1. Optimize your pages properly. Pigeon brought in the same SEO criteria for local listings as for all other Google search results. That means local businesses now need to invest a lot of effort into on-page optimization. A good starting point is running an on-page analysis with SEO PowerSuite's WebSite Auditor. The tool's Content Analysis dashboard will give you a good idea about which aspects of on-page optimization you need to focus on (look for the factors with the Warning or Error statuses). Whenever you feel like you could use some inspo, switch to the Competitors tab to see how your top ranking competitors are handling any given part of on-page SEO.

For a comprehensive guide to on-page optimization, check out the on-page section of SEO Workflow.

2. Set up a Google My Business page. Creating a Google My Business page for your local biz is the first step to being included in Google's local index. Your second step will be to verify your ownership of the listing; typically, this involves receiving a letter from Google with a PIN, which you must enter to complete verification.

As you set up the page, make sure you categorize your business correctly — otherwise, your listing will not be displayed for relevant queries. Remember to use your local area code in the phone number; the area code should match the code traditionally associated with your location. The number of positive reviews can also have an influence on local search rankings, so you should encourage happy customers to review your place.

3. Make sure your NAP is consistent across your local listings. Google will look at the website you've linked to from your Google My Business page and cross-reference the name, address, and phone number (NAP) of your business. If all elements match, you're good to go.

If your business is also featured in local directories of any kind, make sure the business name, address, and phone number are consistent across these listings too. Different addresses listed for your business on Yelp and TripAdvisor, for instance, may tank your local rankings.
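If you keep your listings in a spreadsheet, a quick script can catch NAP mismatches that differ in more than formatting. The sketch below normalizes case, punctuation, a few street abbreviations, and phone formatting before comparing each listing with your website's version; the abbreviation table and the 10-digit (North American) phone assumption are hypothetical simplifications to adapt to your market.

```python
import re

# Hypothetical normalization table; extend it with the abbreviations you use.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_text(value: str) -> str:
    """Lowercase, drop punctuation, and unify common street abbreviations."""
    words = re.findall(r"[a-z0-9]+", value.lower())
    return " ".join(ABBREVIATIONS.get(w, w) for w in words)


def normalize_phone(phone: str) -> str:
    """Keep digits only; assumes 10-digit North American numbers."""
    return re.sub(r"\D", "", phone)[-10:]


def nap_key(listing: dict) -> tuple:
    """Normalized (name, address, phone) tuple for comparison."""
    return (
        normalize_text(listing["name"]),
        normalize_text(listing["address"]),
        normalize_phone(listing["phone"]),
    )


def find_mismatches(reference: dict, listings: dict[str, dict]) -> list[str]:
    """Return the names of listings whose normalized NAP differs from the reference."""
    expected = nap_key(reference)
    return [source for source, data in listings.items() if nap_key(data) != expected]
```

With this approach, "12 Main St., Denver CO" and "12 Main Street, Denver, CO" count as the same address, while a listing with a different street address is flagged for correction.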

4. Get featured in relevant local directories. Local directories such as Yelp and TripAdvisor have seen a major ranking boost since the Pigeon update. So while it may be harder for your own site to rank within the top results now, it's a good idea to make sure you are featured in the business directories that will likely rank high. You can easily find quality directories and reach out to their webmasters to request a listing with SEO PowerSuite's link building tool, LinkAssistant.

Launch LinkAssistant and open or create a project for your site. Click Look for prospects in the top left corner and pick Directories as your research method.

Enter your keywords — just as a hint, specify category keywords plus your location (e.g. "dentist Denver") — and give the tool a sec to find the relevant directories in your niche.

In a minute, you'll see a list of directories along with the webmasters' contact email addresses. Now, pick one of the directories you'd like to be included in, right-click it, and hit Send email to selected partner. Set up your email prefs, compose the message (or pick a ready-made email template), and send it off!

Launched: August 22, 2013

Rollouts: —

Goal: Produce more relevant search results by better understanding the meaning behind queries

Google Hummingbird is a major algorithm change that deals with interpreting search queries (particularly longer, conversational searches) and providing search results that match searcher intent, rather than individual keywords within the query. The algorithm's name comes from comparing it to the precision and speed of the hummingbird.

While keywords within the query continue to be important, Hummingbird adds more strength to the meaning behind the query as a whole. The use of synonyms has also been optimized with Hummingbird; instead of listing results with the exact keyword match, the Google algorithm shows more theme-related results in the SERPs that do not necessarily have the keywords from the query in their content.

1. Expand your keyword research. With Hummingbird, you need to focus on related searches, synonyms and co-occurring terms to diversify your content, instead of relying solely on short-tail terms you'd get from Google Ads. Great sources of Hummingbird-friendly keyword ideas are Google Related searches, Google Autocomplete, and Google Trends. You'll find all of them incorporated into SEO PowerSuite's Rank Tracker.

To start expanding your list of target keywords, open Rank Tracker and create or open a project. Enter the seed terms to base your research on, and start the search. Next, go to the Keyword Research module and check your ranking keywords first. Then, one by one, explore potential keywords with all available keyword research methods. For example, select the Related Searches method, add your topic keywords, and hit Search.

Hang on while Rank Tracker is pulling suggestions for you, and when it's done all the keyword ideas will be in the Sandbox, from where you can pick those that deserve to be added to your rank tracking list. Then go through the process again, this time selecting Google Autocomplete Tools as your research method. Do the same for Related questions. Next, proceed with analyzing the keywords' efficiency and difficulty, and pick the top terms to map them to landing pages.

2. Discover the language your audience uses. It's only logical that your website's copy should be speaking the same language as your audience, and Hummingbird is yet another reason to step up the semantic game. A great way to do this is by utilizing a social media listening tool (like Awario) to explore the mentions of your keywords (your brand name, competitors, industry terms, etc.) and see how your audience is talking about those things across social media and the Web at large.

3. Ditch exact-match, think concepts. Unnatural phrasing, especially in titles and meta descriptions, is still common on websites, but with search engines' growing ability to process natural language, it can become a problem. If you are still using robot-like language on your pages for whatever reason, the Hummingbird update (or, to be honest, even years before it) was the time to stop.

Including keywords in your title and description still matters, but it's just as important that you sound like a human. As a nice side effect, a more natural title and meta description is likely to increase the clicks your Google listing gets.

To play around with your titles and meta descriptions, use SEO PowerSuite's WebSite Auditor. Run the tool, create or open a project, and navigate to the Pages module. Go through your pages' titles and meta descriptions and spot the ones that look like they were created purely for search engine bots. When you spot a title you'd like to correct, right-click the page and hit Analyze page content. The tool will ask you to enter the keywords that you want your page to be optimized for. When the analysis is complete, go to Content Editor, switch to the Title & Meta tags tab, and rewrite your title and/or meta description. Right below, you'll see a preview of your Google snippet.

Launched: Aug 2012

Rollouts: Oct 2014

Goal: De-rank sites with copyright infringement reports

Google's Pirate Update was designed to prevent sites that have received numerous copyright infringement reports from ranking well in Google search. The majority of sites affected are relatively big and well-known websites that made pirated content (such as movies, music, or books) available to visitors for free, particularly torrent sites. Despite this effort, some torrent sites remain popular with users: Google still can't keep up with the numerous new sites with pirated content that emerge literally every day.

Don't distribute anyone's content without the copyright owner's permission. Really, that's it.

Launched: April 24, 2012

Rollouts: May 25, 2012; Oct 5, 2012; May 22, 2013; Oct 4, 2013; Oct 17, 2014; September 27, 2016; October 6, 2016; real-time since

Goal: De-rank sites with spammy, manipulative link profiles

Google Penguin update aimed to identify and down-rank sites with unnatural link profiles, deemed to be spamming the search results by using manipulative link tactics. Google rolled out Penguin updates once or twice a year until 2016, when Penguin became part of Google's core ranking algorithm. Now it operates in real time, which means that the algorithm penalties are applied faster, and recovery also takes less time.

1. Monitor link profile growth. Google isn't likely to penalize a site for one or two spammy links, but a sudden influx of toxic backlinks could be a problem. To avoid getting penalized by Google Penguin updates, look out for any unusual spikes in your link profile, and always look into the new links you acquire. By creating a project for your site in SEO PowerSuite's SEO SpyGlass, you'll instantly see progress graphs for both the number of links in your profile and the number of referring domains. An unusual spike in either of those graphs is reason enough to look into the links your site suddenly gained, in order to secure it from a Google penalty.
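SEO SpyGlass's graphs make spikes easy to eyeball, but if you export daily counts of new referring domains, a simple rule can flag them automatically. The sketch below marks a day as a spike when it exceeds the previous week's average by more than three standard deviations; both the 7-day window and the 3-sigma threshold are arbitrary assumptions you should tune to your site's normal link growth.

```python
from statistics import mean, pstdev


def find_spikes(daily_new_domains: list[int], window: int = 7,
                threshold: float = 3.0) -> list[int]:
    """Return indexes of days whose count is far above the trailing window."""
    spikes = []
    for i in range(window, len(daily_new_domains)):
        history = daily_new_domains[i - window:i]
        avg = mean(history)
        # Fall back to 1.0 so a perfectly flat history doesn't flag every tiny change.
        spread = pstdev(history) or 1.0
        if daily_new_domains[i] > avg + threshold * spread:
            spikes.append(i)
    return spikes
```

Each flagged day is a prompt to open the links gained on that date and check where they came from, not proof of a problem by itself.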

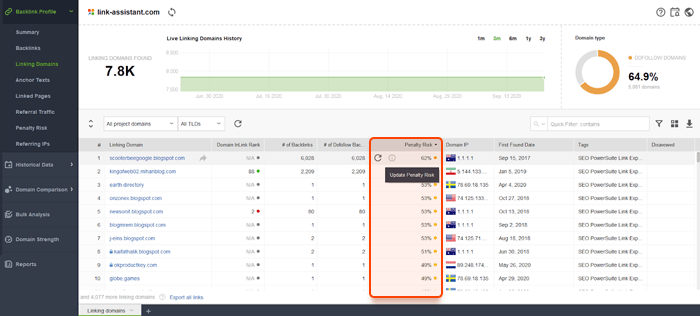

2. Check for penalty risks. The stats that Penguin update likely looks at are incorporated into SEO SpyGlass and its Penalty Risk formula, so instead of looking at each individual factor separately, you can weigh them as a whole, pretty much like the Google algorithm does.

In your SEO SpyGlass project, switch to the Linking Domains dashboard and navigate to the Link Penalty Risks tab. Select all domains on the list, and click Update Link Penalty Risk. Give SEO SpyGlass a minute to evaluate all kinds of quality stats for each one of the domains. When the check is complete, examine the Penalty Risk column, and make sure to manually look into every domain with a Penalty Risk value over 50%.

If you use SEO SpyGlass' free version, you'll get to analyze up to 1,000 links; if you're looking to audit more links, you'll need a Professional or Enterprise license.

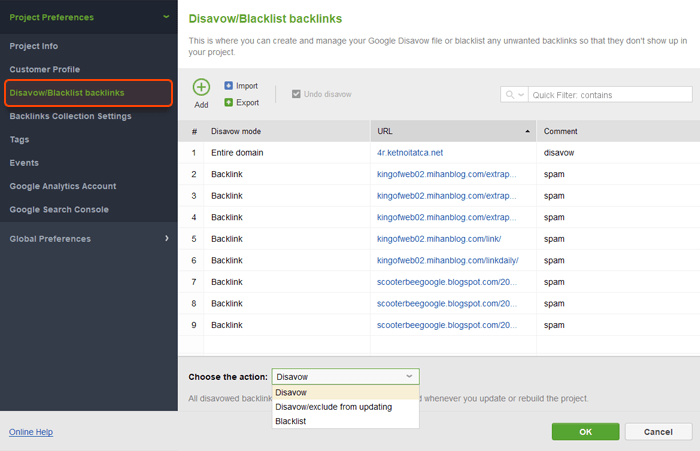

3. Get rid of harmful links. Ideally, you should try to request removal of the spammy links in your link profile by contacting the webmasters of the linking sites. But if you have a lot of harmful links to get rid of, or if you don't hear back from the webmasters, the only remedy you have is to disavow the low-quality links using Google's Disavow tool. This way, you'll be telling Google to ignore those links when evaluating your link profile. Disavow files can be tricky in terms of syntax and encoding, but SEO SpyGlass can automatically generate them for you in the right format.

In your SEO SpyGlass project, select the links you're about to disavow, right-click the selection, and hit Disavow backlinks. Select the disavow mode for your links (as a rule of thumb, you'd want to disavow entire domains rather than individual URLs). Once you've done that for all harmful links in your project, go to Preferences > Blacklist/Disavow backlinks, review your list, and hit Export to save the file to your hard drive. Finally, upload the disavow file you just created to Google's Disavow tool.
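For reference, a disavow file is a plain-text file (UTF-8 or 7-bit ASCII) with one entry per line: either a full URL, or `domain:` followed by a domain name to disavow the whole domain, with lines starting with `#` treated as comments. The hypothetical helper below builds such a file from a domain-to-risk mapping, keeping only domains over the 50% Penalty Risk mark mentioned earlier; it assumes you have already reviewed those domains manually.

```python
from pathlib import Path
from typing import Iterable, Optional


def build_disavow(domain_risks: dict[str, float],
                  urls: Optional[Iterable[str]] = None,
                  threshold: float = 50.0) -> str:
    """Return disavow-file text: `domain:` lines for risky domains, then single URLs."""
    lines = [f"# Domains with Penalty Risk over {threshold:g}%, reviewed manually"]
    for domain, risk in sorted(domain_risks.items()):
        if risk > threshold:  # strictly "over" the threshold
            lines.append(f"domain:{domain}")
    url_list = list(urls or [])
    if url_list:
        lines.append("# Individual URLs")
        lines.extend(url_list)
    return "\n".join(lines) + "\n"


def save_disavow(text: str, path: str = "disavow.txt") -> None:
    """Write the file as UTF-8, one of the encodings the Disavow tool accepts."""
    Path(path).write_text(text, encoding="utf-8")
```

Disavowing at the domain level, as the text recommends, keeps the file short and also covers links from pages on those domains that you haven't found yet.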

Since Penguin has become part of the core ranking algorithm, one cannot say for sure that a Penguin update happens on this or that day. If you notice that your site authority or visibility has dropped, it can be a signal that the site has been penalized by Penguin. Likewise, one cannot say exactly when full recovery takes place after all harmful links are removed. Positive effects may appear as soon as Google recrawls the affected pages. However, you cannot expect your site authority to return straight to its previous level, since it had been inflated by artificial links. You'll need to apply more careful link-building tactics.

Sometimes sites get hit by a manual Google penalty rather than a Penguin algorithm sanction. This happens when human reviewers at Google notice manipulative tactics on a site that have gone unnoticed by Penguin. You will receive a notice about a manual Google penalty under the Manual Actions tab in Search Console. The recovery path here is similar: remove or disavow the bad backlinks, then ask Google to reconsider the manual action.

For a more detailed guide on conducting a Penguin-proof link audit, jump here.

Launched: Feb 24, 2011

Rollouts: ~monthly

Goal: De-rank sites with low-quality content

Google Panda is an algorithm used to assign a content quality score to webpages and down-rank sites with low-quality, spammy, or thin content. Initially, Panda update was a search filter rather than a part of Google's core algorithm, but in January 2016, it was officially incorporated into the ranking algorithm. While this doesn't mean that Panda is now applied to search results in real time, it does indicate that both getting filtered by and recovering from Google Panda now happens faster than before.

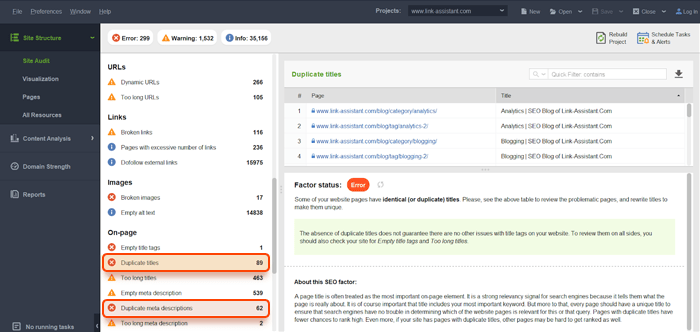

1. Check for duplicate content across your site. Internal duplicate content is one of the most common triggers for Google Panda update, so it's recommended that you run regular site audits to make sure no duplicate content issues are found. You can do it with SEO PowerSuite's Website Auditor (if you have a small site with under 500 resources, the free version should be enough; for bigger websites, you'll need a WebSite Auditor license).

To start the check for duplicate content, launch WebSite Auditor and create a project for your site. Wait a moment until the app completes the crawl. When it's done, pay attention to the on-page section of SEO factors on the left, especially Duplicate titles and Duplicate meta descriptions. If any of those have an Error status, click on the problematic factor to see a full list of pages with duplicate titles/descriptions.
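The check behind a "Duplicate titles" report boils down to grouping URLs by their title text. Here is a minimal sketch, assuming you already have a mapping of URLs to titles (or meta descriptions) from a crawl export; it ignores case and extra whitespace, which is our own choice of what counts as "the same".

```python
from collections import defaultdict


def find_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs that share the same title (or meta description).

    Comparison ignores case and extra whitespace; only groups with 2+ URLs are returned.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        key = " ".join(text.lower().split())
        groups[key].append(url)
    return {key: urls for key, urls in groups.items() if len(urls) > 1}
```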

If for some reason you can't take down duplicate pages that could trigger Google Panda, use a 301 redirect or a canonical tag; alternatively, you can keep the pages out of Google's index with the noindex meta tag. Blocking them in robots.txt stops Google from crawling them, but it doesn't guarantee they won't be indexed.

2. Check for plagiarism. External duplicate content is another Panda trigger. If you suspect that some of your pages may be duplicated externally, it's a good idea to check them with Copyscape. Copyscape gives some of its data for free (for instance, it lets you compare two specific URLs), but for a comprehensive check, you may need a paid account.
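For a quick self-check between two texts you already have (say, your product description and a suspected copy), a common technique is to compare overlapping word sequences ("shingles") using Jaccard similarity. This is a generic sketch, not how Copyscape works internally; the 5-word shingle size is an assumption, and texts shorter than one shingle simply score 0.

```python
import re


def shingles(text: str, size: int = 5) -> set[tuple[str, ...]]:
    """All overlapping runs of `size` consecutive lowercase words."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 5) -> float:
    """Shared shingles divided by all distinct shingles (0 = no overlap, 1 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)
```

A high score between two pages is a signal to rewrite one of them, or to point search engines at the original with a canonical tag.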

Many industries (like online stores with thousands of product pages) cannot always have 100% unique content. If you run an e-commerce site, try to use original images where you can, and encourage user reviews to make your product descriptions stand out from the crowd.

3. Identify thin content. Thin content is a bit of a vague term, but it's generally used to describe an inadequate amount of unique content on a page. Thin pages often consist of duplicate, scraped, or automatically generated content. Typically, thin content appears on pages with a low word count that are filled with ads, affiliate links, etc., and provide little original value. If you feel that your site could be hit by Google Panda for thin content, try to measure it in terms of word count and the number of outgoing links on the page.

To check for thin content, navigate to the Pages module in your WebSite Auditor project. Locate the Word count column (if it's not there, right-click the header of any column to enter the workspace editing mode, and add the Word count column to your active columns). Next, sort the pages by their word count by clicking on the column's header to instantly spot the ones with very little content that risk being hit by Panda.

Next, switch to the Links tab and find the External links column, showing the number of outgoing external links on the page. You can sort your pages by this column as well by clicking on its header. You may also want to add the Word count column to this workspace to see the correlation between outgoing links and word count on each of your pages. Watch out for pages with little content and a substantial number of outgoing links.

Mind that a "desirable" word count on any page is tied to the purpose of the page and the keywords that page is targeting. E.g. for queries that imply the searcher is looking for quick information ("what's the capital of Nigeria", "gas stations in Las Vegas"), pages with a hundred words of content can do exceptionally well on Google. The same goes for searchers looking for videos or pictures. But if those are not the queries you're targeting, too many thin content pages (<250 words) may get you in trouble.

As for outgoing links, Google recommends keeping the total number of links on every page under 100 as a rule of thumb. So if you spot a page with under 250 words of content and over 100 links, that's a pretty solid indicator of a thin content page.
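The rule of thumb above (under 250 words and over 100 links) is easy to apply to raw HTML as well. The sketch below uses Python's built-in HTML parser to count visible words (skipping `<script>` and `<style>`) and `<a href>` links; note that it counts all links, internal and external, and includes anchor text in the word count, which are simplifying assumptions.

```python
from html.parser import HTMLParser


class PageStats(HTMLParser):
    """Count visible words and <a href> links in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.words = 0
        self.links = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a" and any(name == "href" for name, _ in attrs):
            self.links += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def page_stats(html: str) -> tuple[int, int]:
    """Return (word_count, link_count) for one HTML page."""
    parser = PageStats()
    parser.feed(html)
    parser.close()
    return parser.words, parser.links


def is_thin(word_count: int, link_count: int,
            max_words: int = 250, max_links: int = 100) -> bool:
    """Rule of thumb from the text: under 250 words and over 100 links."""
    return word_count < max_words and link_count > max_links
```

Treat a positive result as a page to review rather than an automatic verdict, since short pages can be perfectly fine for quick-answer queries.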

4. Audit your site for keyword stuffing. Keyword stuffing is a term used to describe over-optimization of a given page element for a keyword. When all optimization efforts fail and your pages do not reach those desired top 10, it can be indicative of keyword stuffing issues and Google Panda update sanctions. To figure out if this is the case, look at your top ranking competitors' pages (that's exactly what SEO PowerSuite's WebSite Auditor uses in its Keyword Stuffing formula, in addition to the general SEO best practices).

In your WebSite Auditor project, go to Content Analysis, and add the page you'd like to analyze for Panda-related issues. Enter the keywords you're targeting with this page, and let the tool run a quick audit. When the audit is complete, pay attention to Keywords in title, Keywords in meta description, Keywords in body, and Keywords in H1. Click through these factors one by one, and have a look at the Keyword stuffing column. You'll see a Yes value here if you're overusing your keywords in any of these page elements. To see how your top competitors are using keywords, switch to the Competitors tab.
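WebSite Auditor's Keyword Stuffing formula isn't public, so the sketch below swaps in a simpler, well-known proxy: keyword density (the share of words taken up by exact keyword matches), compared with your competitors' densities. The 1.5× margin over the competitor average is an arbitrary assumption, not a Google threshold.

```python
import re
from statistics import mean


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in `text` covered by exact matches of `keyword`."""
    words = re.findall(r"\w+", text.lower())
    phrase = re.findall(r"\w+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words) * 100


def looks_stuffed(own_density: float, competitor_densities: list[float],
                  margin: float = 1.5) -> bool:
    """Flag a page whose density exceeds the competitor average by `margin` times."""
    return own_density > margin * mean(competitor_densities)
```

For instance, "cheap shoes" appears 3 times in an 8-word snippet like "cheap shoes cheap shoes buy cheap shoes today", so its density is 75%, far above any competitor page written for humans.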

5. Fix the problems you find. Once you've identified the Panda-prone vulnerabilities, fix them as soon as you can to avoid being hit by the next Panda algorithm iteration (or to recover quickly if you've already been penalized). If only a few pages have duplicate content, use WebSite Auditor's Content Editor to edit them right inside the module and watch the quality factors improve as you go. Go to Content Analysis > Content Editor and edit your content in either the WYSIWYG editor or HTML. Here you can fine-tune your titles and meta descriptions in a user-friendly editor with a Google snippet preview, while the on-page factors on the left recalculate as you type. Once you've made the necessary changes, hit the Download PDF button, or use the down arrow to export the document as an HTML file to your hard drive.

If you're looking for more detailed instructions, jump to this 6-step guide to conducting a content audit for Panda-related issues.

Those are the major Google algorithm updates to date, along with some quick auditing and prevention tips to help your site stay afloat (and, with any luck, keep growing) in Google search.

I skipped some well-known Google algorithm updates that had a one-off effect on rankings and left little room for any recovery strategy. For example, in 2012 Google rolled out a filter to downgrade exact-match domains without high-quality content. Such domains had proliferated for a short time, when an exact keyword match in the domain name was enough to rank, and were part of a range of manipulative ranking tactics. Nor is there much to say about the Intrusive Interstitials update dating back to 2015, since the Mobile-Friendly Update has made all such mobile tricks much harder to get away with.

So our list of Google algorithm updates is far from exhaustive. Rather, it focuses on those workings of the search engine that can be uncovered with the help of SEO tools.

As always, we're looking forward to your comments and questions below. Have any of these updates had an impact on your rankings? If so, what tactic helped you recover? Please share your experience in the comments!

This Google algorithm updates cheat sheet was first written years ago and is regularly revised and updated for freshness.