
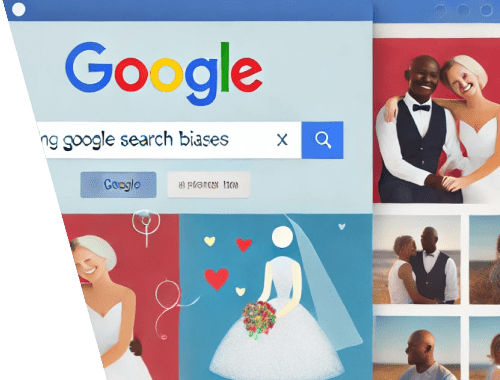

Ever noticed something strange when you search for images on Google? Say you type in "white couple" and expect a gallery of, well, white couples. Instead, you also see interracial couples mixed in. It’s not a bug or a weird conspiracy; it’s a side effect of how Google’s image search works.

Let’s dive into what’s happening here, why some results look biased, and what it all means.

Imagine this: you search “white couple,” and instead of just showing white couples, Google throws in a mix, including interracial couples.

This sparked a lot of questions online, with users wondering if Google is intentionally being politically correct or trying to make a statement.

Google’s Danny Sullivan, the company’s public Search Liaison, weighed in on the controversy to clarify things:

> We don't. As it turns out, when people post images of white couples, they tend to say only "couples" & not provide a race. But when there are mixed couples, then "white" gets mentioned. Our image search depends heavily on words - so when we don't get the words, this can happen.

It all boils down to how images are labeled. Photos of white couples are usually described simply as a “couple,” with no racial descriptor. Photos of interracial couples, on the other hand, tend to be described with race words (for example, “white man and black woman”), so the word “white” actually appears in their text. Because Google’s image search leans heavily on the words attached to an image, a query for “white couple” matches those captions, which explains the mix.
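To make Sullivan’s point concrete, here is a toy sketch of text-based image retrieval. It is not Google’s system: the captions, the function names (`tokenize`, `score`, `search`), and the simple term-overlap scoring are hypothetical assumptions chosen only to show how labeling habits alone can shape the results.

```python
# Toy illustration only: a hypothetical caption-matching ranker, NOT Google's actual system.

# Hypothetical captions, written the way Sullivan describes people labeling photos:
# same-race couples are just "couple", while mixed couples get racial descriptors.
captions = {
    "img1": "happy couple at the beach",
    "img2": "couple walking in the park",
    "img3": "white man and black woman, a happy couple",
    "img4": "young couple cooking dinner",
    "img5": "interracial couple: black man and white woman",
}


def tokenize(text: str) -> list[str]:
    # Lowercase, replace punctuation with spaces, then split on whitespace.
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return cleaned.split()


def score(query: str, caption: str) -> int:
    # Count how many query terms appear in the caption (simple term overlap).
    caption_terms = set(tokenize(caption))
    return sum(1 for term in tokenize(query) if term in caption_terms)


def search(query: str) -> list[str]:
    scored = [(score(query, cap), img) for img, cap in captions.items()]
    # Highest overlap first; ties broken by image id so the output is stable.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [img for s, img in ranked if s > 0]


print(search("white couple"))  # → ['img3', 'img5', 'img1', 'img2', 'img4']
```

In this sketch, the only captions containing the word “white” describe mixed couples, so they rank first for “white couple” even though nothing in the code looks at the images themselves.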

When you search “happy white woman” on Google, you might expect a straightforward result—images of smiling white women.

However, the results often veer toward stock photos, showing an overly polished and stereotypical version of happiness. This can raise questions about how search engines like Google handle seemingly simple queries and whether they unintentionally reflect certain biases.

This isn’t necessarily Google’s fault. The images dominating the results are often created by content producers, such as stock photo companies, who curate and label their images in specific ways.

For example, photos tagged as "happy white woman" are typically designed for marketing purposes, with the intention of portraying a commercialized version of joy that appeals to a broad audience. These patterns influence Google's algorithms, which rely heavily on metadata and tags to populate search results.

This becomes a bigger issue when we consider how these patterns can reinforce narrow portrayals of identity. A keyword like "happy white woman" may bring up an idealized, one-dimensional version of happiness, leaving out diverse and realistic representations. It’s a subtle reminder that what we see online is often shaped by cultural and commercial priorities, not just the algorithm itself.

By staying focused on how these systems work and analyzing the content they rely on, we can better understand why certain searches feel off and what it says about the data driving them.

Here’s the deal: Google doesn’t intentionally add bias to its image search results. The problem lies in how content is created and labeled online. Let’s break it down:

This isn’t just a quirky Google glitch—it’s a reminder of how technology shapes our perceptions. When search results unintentionally reinforce stereotypes or fail to meet expectations, they have real-world impacts.

For example, when searching “white couple,” if users repeatedly see a mix of interracial couples, it can challenge preconceived notions about relationships (which isn’t necessarily bad). On the other hand, stereotypical results like those for “happy white woman” can reinforce narrow societal views.

So can Google fix this? The short answer: it’s trying. Google constantly updates its algorithms to tackle issues like this, and it is also looking into ways to better understand context and improve diversity in its results. But it’s a tricky balance: how do you fix biases without stepping on free speech or ignoring how content creators label their work?

Here’s what could help:

Google’s image search results, whether for “white couple” or “happy white woman,” aren’t deliberately racist or biased—they’re a reflection of our society and its content. The responsibility to address these biases lies not just with Google but with everyone who creates, shares, and consumes online content.

So next time you notice something off about a search result, remember: it’s not just Google’s algorithms—it’s us too.