
In SEO, auditing a website’s content from scratch may seem daunting. You face a new website you’ve never seen before. You need to check each and every page to see whether its content is relevant to the search queries and matches Google’s content quality guidelines. You need to check whether the right keywords are used. You have to spot and fix any content issues to avoid ranking drops and bring in more traffic instead. The only question is: where should you start?

In this super-detailed guide, I’ll walk you through the whole content audit process, and you’ll see that it is a structured system rather than chaos. So, here we go.

Since you’re seeing the website for the first time, you have no idea of its structure and content yet. So you’ll first need to collect the list of all the website’s URLs before you start working on content optimization. Many dedicated tools can do this, but I’ll proceed with WebSite Auditor.

Launch the tool and create a project for the website you’re working on. To make the tool crawl your pages the way Google would, tick Enable expert options and choose Googlebot in the Follow robots.txt instructions for pop-up menu.

Then switch to the URL Parameters section and untick Ignore URL parameters so the tool can spot dynamic URLs, if there are any.

Now have some coffee while the tool collects the website’s URLs. Once the process is complete, you’ll see the full list of the website’s pages under Site Structure > Pages.

To see all the pages analyzed and checked for technical and content issues, go to Site Structure > Site Audit.

The tool will also create a visualization of the website structure, so you can easily understand what sections the website consists of and how they are interconnected (Site Audit > Visualization).

Once you have the full list of pages, you can proceed with the actual content audit.

Google will not penalize your website for duplicate content, but duplicates can still cause crawl budget problems, link juice dilution, and an overall ranking drop. So the first step of a content audit is to find internal duplicate content issues and deal with them.

To spot the most evident content duplication issues, go to WebSite Auditor’s Site Structure > Site Audit section. It will help you find problems related to:

Duplicated titles and meta descriptions

Empty titles and meta descriptions

HTTP/HTTPS issues

WWW and non-WWW issues

Dynamic URLs

If there’s an issue with any of the aspects mentioned above, the tool will report an error and list the affected URLs.

What you need to do is visit each reported URL manually and investigate the issue more closely.

Each case of content duplication should be handled according to its specific situation.

As for duplicate titles and meta descriptions, title duplication often doesn’t mean content duplication: the pages are actually different. In this case, rewrite the titles and descriptions so that they fit each page’s content. The same goes for empty titles.
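If you export your crawl data, the same duplicate and empty title check can be sketched in a few lines of Python. This is a minimal sketch, not how WebSite Auditor works internally: it assumes a hypothetical list of (URL, title) pairs and compares titles case-insensitively. The same function works for meta descriptions.

```python
from collections import defaultdict

def find_title_issues(pages):
    """Return (duplicates, empty) for a list of (url, title) pairs.

    duplicates maps each normalized title shared by 2+ URLs to those URLs;
    empty lists the URLs whose title is missing or whitespace-only.
    """
    by_title = defaultdict(list)
    empty = []
    for url, title in pages:
        normalized = (title or "").strip().lower()  # case-insensitive comparison
        if not normalized:
            empty.append(url)
        else:
            by_title[normalized].append(url)
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return duplicates, empty

# Hypothetical crawl data, for illustration only.
pages = [
    ("https://example.com/red-shoes", "Shoes | Example Store"),
    ("https://example.com/blue-shoes", "Shoes | Example Store"),
    ("https://example.com/about", "About us"),
    ("https://example.com/contact", ""),
]
duplicates, empty = find_title_issues(pages)
print(duplicates)  # {'shoes | example store': ['https://example.com/red-shoes', 'https://example.com/blue-shoes']}
print(empty)       # ['https://example.com/contact']
```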

HTTP/HTTPS and WWW/non-WWW issues result in complete content duplication. This happens when the older (HTTP and/or non-WWW) versions of the website are still accessible, for example, because backlinks or internal links still point to those older pages. Both issues can be fixed by implementing 301 redirects to the preferred version.
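To plan those redirects, it helps to map every crawled URL variant to the one version you want to keep. Here is a minimal sketch, assuming HTTPS with www is the preferred version and that the site uses default ports; the function names are hypothetical. The actual redirects still have to be configured on your server or CMS.

```python
from urllib.parse import urlsplit, urlunsplit

def preferred_url(url, use_www=True):
    """Rewrite a URL to the preferred HTTPS (+ optional www) variant.

    Assumes default ports: any explicit port or credentials are dropped.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""  # lowercased, without port or credentials
    if use_www and not host.startswith("www."):
        host = "www." + host
    elif not use_www and host.startswith("www."):
        host = host[len("www."):]
    return urlunsplit(("https", host, parts.path, parts.query, ""))

def redirect_map(urls, use_www=True):
    """Map each non-preferred URL to the target it should 301-redirect to."""
    mapping = {}
    for url in urls:
        target = preferred_url(url, use_www)
        if target != url:
            mapping[url] = target
    return mapping

crawled = [
    "http://example.com/pricing",
    "https://example.com/pricing",
    "http://www.example.com/pricing",
    "https://www.example.com/pricing",
]
for source, target in redirect_map(crawled).items():
    print(f"301 {source} -> {target}")
```

Only the first three URLs get a redirect entry; the preferred HTTPS www URL maps to itself and is skipped.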

Dynamic URLs may also cause content duplication. This is a common “disease” of big ecommerce websites with faceted navigation, where pages are available in multiple versions or can be reached in different ways. In this case, you can apply the rel=canonical tag to mark the main page you want Google and users to see in search.
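Working out the canonical version of a faceted URL usually means dropping the parameters that only filter, sort, or track, and keeping the ones that change the content. Below is a minimal sketch under that assumption; the parameter names in `NON_CANONICAL_PARAMS` are hypothetical and must be replaced with the ones your site actually uses.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical facet/tracking parameters that only filter or re-sort the same content.
NON_CANONICAL_PARAMS = {"color", "size", "sort", "utm_source", "sessionid"}

def canonical_url(url):
    """Drop the assumed non-canonical parameters and keep the rest (e.g. pagination)."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in NON_CANONICAL_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """Build the <link rel="canonical"> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'

print(canonical_tag("https://www.example.com/shoes?color=red&sort=price&page=2"))
# <link rel="canonical" href="https://www.example.com/shoes?page=2">
```

Keeping `page` in the canonical URL is deliberate in this sketch: each paginated page shows different products, so it shouldn’t be canonicalized to page one.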

Pages with completely or partially different URLs can also have the same content. In this case, you have to investigate the reason more closely. For example, it could be a test version of a page that got indexed by mistake. Or the website is so big that the owners forgot they already had a page and created a new one. Or it’s a special landing page created for some event. In most cases like these, you’ll need to set up 301 redirects so that users and search engines reach the correct page.

Manual investigation may also reveal that some of your pages have near-duplicate content. This is a common problem of big ecommerce websites that sell a multitude of similar products. In this case, consider expanding the pages’ content by adding, say, customer reviews.
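If you have the pages’ text at hand, one common way to shortlist near-duplicates before reviewing them manually is to compare overlapping word sequences (“shingles”) using Jaccard similarity. This is a minimal sketch of that technique; the three-word shingle size and the 0.5 threshold are assumptions to tune, not values from this guide.

```python
import re
from itertools import combinations

def shingles(text, size=3):
    """Split text into a set of lowercase word n-grams ("shingles")."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard(a, b):
    """Share of shingles two pages have in common, from 0.0 to 1.0."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def near_duplicates(pages, threshold=0.5):
    """Yield (url1, url2, score) for page pairs at or above the assumed threshold."""
    sets = {url: shingles(text) for url, text in pages.items()}
    for (u1, s1), (u2, s2) in combinations(sets.items(), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            yield u1, u2, round(score, 2)

# Hypothetical product pages, for illustration only.
pages = {
    "/mug-red": "Ceramic coffee mug, 350 ml, dishwasher safe. Color: red.",
    "/mug-blue": "Ceramic coffee mug, 350 ml, dishwasher safe. Color: blue.",
    "/about": "We are a small family business founded in 2010.",
}
print(list(near_duplicates(pages)))  # [('/mug-red', '/mug-blue', 0.75)]
```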

Google will likely downrank your pages if they use content taken from other websites. The same can happen when other websites take your content, as Google may not recognize your page as the original. So your next step is to check your content for external duplication issues.

To check whether your content appears anywhere other than on your website, you can use plagiarism checking tools, for example, Copyscape. Just paste the URL of the page you want to check and let the tool search the web for copies of your content.

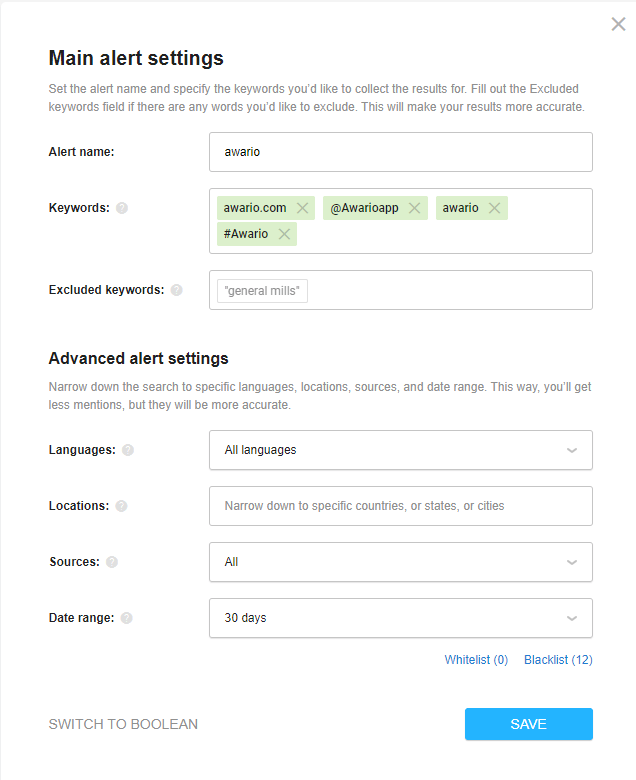

You can also monitor mentions of your content across the web and on social media with the Awario app. When you create an alert for your content, add an excerpt from your copy, say, a sentence or two. Surround the excerpt with double quotes so that the app monitors exact matches.

External content duplicates may be of two types: scraped and syndicated.

Scraped content is a piece of content that has been copied from another website without permission. If you find that a website has scraped your content, contact the webmaster and politely ask them to remove the content taken from your website. This often works, as webmasters may not even know that their copywriters have stolen your content.

Still, the polite approach may not work. In that case, you can report the scraper with Google’s copyright infringement report. But keep in mind that this is a legal matter rather than an SEO one, so file an infringement report only if the scraper tries to pass off the copied content as the original, claiming ownership of your copyright-protected content.

If content scraping happens only once in a while, you may leave it as it is and even benefit from it. First, take care of internal linking: automatic scrapers will likely leave your internal links in place, and if those links use absolute URLs, they turn into additional backlinks to your site. Second, you can add a self-referencing rel=canonical tag pointing the page to itself, so that if a scraper copies the page’s HTML along with the tag, the scraped copy gets canonicalized to your original piece.

Syndicated content is content republished on another website with the permission of the original author. In this case, all you need is to ask your publishers to set a rel=canonical tag on the republished piece pointing to your page, to indicate that your page is the original.
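Both cases above come down to one check: does a given page’s canonical tag point where you expect? For your own page, that’s the page itself; for a republished copy, it’s your original URL. Here is a minimal sketch using Python’s built-in HTML parser; the helper names and URLs are hypothetical, and URLs are compared as exact strings, so trailing slashes and similar variations are not normalized.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonicals.append(attrs.get("href"))

def points_to(html, expected_url):
    """True if the page declares exactly one canonical and it equals expected_url."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals == [expected_url]

original = "https://www.example.com/blog/content-audit"
own_page = '<head><link rel="canonical" href="https://www.example.com/blog/content-audit"></head>'
partner_copy = '<head><link rel="canonical" href="https://partner.example.org/republished"></head>'

print(points_to(own_page, original))      # True: self-referencing canonical is in place
print(points_to(partner_copy, original))  # False: the syndicated copy doesn't credit you
```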

If it turns out that it’s your copywriters who took someone else’s content, all that’s left is to rewrite the duplicated piece to make it unique.

Thin content refers to pages that contain only a few lines of text. Google uses content to determine a page’s relevance to a query. If there’s little content, Google may consider the page low-quality. Moreover, Google may not be able to properly understand what the page is about and may rank it lower.

There are no strict guidelines on what content is definitely considered thin, since way too much depends on the search query. Still, to streamline the audit, I’d suggest investigating pages that contain fewer than 250 words. These pages are likely to have issues, so fix them to avoid any misunderstandings with Google.
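If you’d like to apply the 250-word threshold to raw HTML outside the tool, here is a minimal sketch. It counts whitespace-separated words in the visible text and skips `<script>` and `<style>` contents, so its numbers are approximate and won’t necessarily match WebSite Auditor’s Word Count column; the page data is hypothetical.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)

def word_count(html):
    """Approximate word count of a page's visible text."""
    parser = TextExtractor()
    parser.feed(html)
    return len(" ".join(parser.chunks).split())

def thin_pages(pages, min_words=250):
    """Return the URLs of pages with fewer than min_words words."""
    return [url for url, html in pages.items() if word_count(html) < min_words]

# Hypothetical pages, for illustration only.
pages = {
    "/short": "<html><body><p>Free shipping on all orders.</p>"
              "<script>var x = 1;</script></body></html>",
    "/long": "<p>" + "word " * 300 + "</p>",
}
print(thin_pages(pages))  # ['/short']
```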

To check the number of words on a page in WebSite Auditor, go to Site Structure > Pages section and look at the Word Count column.

A page may get decent traffic but have a super-low time on page and a high bounce rate. This means that people click through to the page, but then something scares them off and they leave quickly. Your goal is to find the reason for this behavior.

In your Google Analytics account, go to the Behavior > Site Content > All Pages report and carefully look through the list of your URLs.
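With a long list of URLs, it can help to export the report and filter it before visiting pages. Below is a minimal sketch of such a filter. The column names (`page`, `pageviews`, `avg_time_on_page` in seconds, `bounce_rate` in percent) and all thresholds are hypothetical, so rename and convert the columns to match your actual export.

```python
import csv
import io

def suspicious_pages(csv_text, min_pageviews=100, max_seconds=15, min_bounce=70.0):
    """Flag pages with decent traffic but short visits and a high bounce rate.

    Assumes hypothetical columns: page, pageviews, avg_time_on_page (seconds),
    bounce_rate (percent, with or without a trailing "%").
    """
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        views = int(row["pageviews"])
        seconds = float(row["avg_time_on_page"])
        bounce = float(row["bounce_rate"].rstrip("%"))
        if views >= min_pageviews and seconds <= max_seconds and bounce >= min_bounce:
            flagged.append(row["page"])
    return flagged

# Hypothetical export, for illustration only.
export = """page,pageviews,avg_time_on_page,bounce_rate
/pricing,1200,9,82%
/blog/seo-tips,900,140,35%
/old-promo,40,5,90%
"""
print(suspicious_pages(export))  # ['/pricing']
```

The minimum-traffic filter keeps rarely visited pages like `/old-promo` out of the list, since their metrics are based on too few visits to tell you much.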

Manually visit the pages with low time on page and high bounce rate and investigate each issue.

Users may bounce for different reasons: poor user experience, annoying pop-ups, thin content, misleading URLs, etc. So act according to the situation: improve UX, remove pop-ups and other elements that hurt the page’s performance, flag the content that needs refinement, and so on.

Another step in auditing your content is to make sure it covers all of your targeted keyword topics (if you don’t yet know what they are, check out this keyword research guide).

You’ll need to make sure that:

none of your keywords are left out

none of them are scattered across multiple pages, which can result in keyword cannibalization

every page on the website has its purpose

And to do that, I suggest using WebSite Auditor’s Keyword Map module.

If you’ve previously used Rank Tracker for keyword research, go to WebSite Auditor’s Page Audit > Keyword Map, and click Import from Rank Tracker.

If you’ve worked with some other tool, export your keywords to a CSV file and click Import from CSV. Or just enter your keywords manually.

Now you can assign your keywords or keyword groups to the relevant pages.

After matching keywords to relevant pages, check what’s left.

If any of the keywords don’t seem to fit into any of the existing pages, you’ll probably need to add new content to cover them.

Conversely, if any pages are left without a single keyword, they likely don’t fit into your current SEO strategy, and you might want to:

Try to broaden the semantic core and pick extra keywords for those extra pages;

Redirect, deindex, or delete the page altogether.
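The three checks listed earlier (no keyword left out, no keyword scattered across several pages, no page without a purpose) boil down to simple set logic. Here is a minimal sketch, assuming you have your keyword list, page list, and keyword-to-page assignments as plain Python data; these structures are hypothetical and not WebSite Auditor’s export format.

```python
from collections import defaultdict

def audit_keyword_map(keywords, pages, assignments):
    """Check a keyword map for gaps and overlaps.

    keywords: all target keywords; pages: all site URLs;
    assignments: (keyword, url) pairs from your keyword map.
    Returns (left_out_keywords, cannibalized_keywords, pages_without_keywords).
    """
    pages_by_keyword = defaultdict(set)
    for keyword, url in assignments:
        pages_by_keyword[keyword].add(url)
    mapped_pages = {url for _, url in assignments}

    left_out = [k for k in keywords if not pages_by_keyword.get(k)]
    cannibalized = {k: sorted(urls) for k, urls in pages_by_keyword.items() if len(urls) > 1}
    purposeless = [p for p in pages if p not in mapped_pages]
    return left_out, cannibalized, purposeless

# Hypothetical keyword map, for illustration only.
keywords = ["running shoes", "trail running shoes", "shoe care"]
pages = ["/running-shoes", "/trail-shoes", "/blog/old-news"]
assignments = [
    ("running shoes", "/running-shoes"),
    ("running shoes", "/trail-shoes"),
    ("trail running shoes", "/trail-shoes"),
]
left_out, cannibalized, purposeless = audit_keyword_map(keywords, pages, assignments)
print(left_out)      # ['shoe care']
print(cannibalized)  # {'running shoes': ['/running-shoes', '/trail-shoes']}
print(purposeless)   # ['/blog/old-news']
```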

Once you have all your keywords carefully assigned to relevant pages, you need to check how well each page is optimized for the chosen keywords.

If you have previously mapped all your keywords in WebSite Auditor, you can further use the tool’s Keyword Map module to check the optimization rate of your pages in bulk. To do that, select all your URLs in the Mapped pages section, right-click them, and choose Update optimization rate > Your page and competitors.

To investigate the pages with a poor optimization score further, click Analyze page content.

Then switch to the Page Audit > Content Editor module. Here you will find hints for content improvement based on your top SERP competitors.

You can download these optimization instructions as a PDF file right from the tool and pass them to your copywriters so they can easily start updating the pages’ content.

If your job is to run the audit “right here and right now”, at this point you will simply pass the results to whoever handles the project next. But if the website you’re auditing is an ongoing SEO project of yours, you might want to double-check your work in a couple of weeks.

One of your key concerns may be whether Google has understood your website structure correctly. To make things clear, I recommend taking the time to compare the pages that actually rank on Google to those you planned in your keyword map.

When checking keyword rankings in Rank Tracker (Target Keywords > Rank Tracking), you have your ranking URLs listed in the Google URL Found column.

Make sure those are exactly the pages you expect. If not, check what might have confused Google.
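For a large keyword list, the comparison can be scripted once you have both sides as keyword-to-URL pairs. This is a minimal sketch, assuming hypothetical dictionaries built from your keyword map and from the Google URL Found values; it treats URLs that differ only by a trailing slash as the same page and reports keywords that don’t rank at all as `None`.

```python
def normalize(url):
    """Treat URLs that differ only by a trailing slash as the same page."""
    return url.rstrip("/") if url else url

def ranking_mismatches(planned, found):
    """Return {keyword: (planned_url, actual_url)} where Google ranks another page.

    planned: keyword -> URL from your keyword map.
    found: keyword -> ranking URL, missing if the keyword doesn't rank.
    """
    report = {}
    for keyword, expected in planned.items():
        actual = found.get(keyword)
        if normalize(actual) != normalize(expected):
            report[keyword] = (expected, actual)
    return report

# Hypothetical data, for illustration only.
planned = {
    "content audit": "https://www.example.com/blog/content-audit/",
    "seo tools": "https://www.example.com/tools/",
}
found = {
    "content audit": "https://www.example.com/blog/content-audit",
    "seo tools": "https://www.example.com/",
}
print(ranking_mismatches(planned, found))
# {'seo tools': ('https://www.example.com/tools/', 'https://www.example.com/')}
```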

Is there something wrong with your website structure? Are there any issues with the instructions in the robots.txt file? And do the intended pages even have enough SEO authority to rank?
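For the robots.txt question, a quick first check is whether the intended pages are even allowed for Googlebot. Here is a minimal sketch using Python’s standard `urllib.robotparser`; the robots.txt content and URLs are hypothetical. Keep in mind that this parser uses simple prefix matching and doesn’t support Google’s `*` and `$` wildcards inside paths, so treat its answers as approximate.

```python
from urllib.robotparser import RobotFileParser

def blocked_for_googlebot(robots_txt, urls):
    """Return the URLs that the given robots.txt disallows for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]

# Hypothetical robots.txt and URLs, for illustration only.
robots_txt = """
User-agent: Googlebot
Disallow: /blog/drafts/

User-agent: *
Disallow: /checkout/
"""
urls = [
    "https://www.example.com/blog/content-audit",
    "https://www.example.com/blog/drafts/content-audit-v2",
    "https://www.example.com/checkout/",
]
print(blocked_for_googlebot(robots_txt, urls))
# ['https://www.example.com/blog/drafts/content-audit-v2']
```

Note that `/checkout/` is not reported: Googlebot follows only the group addressed to it by name and ignores the `*` group, which is a common source of surprises when auditing robots.txt.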

A content audit is time-consuming, so much so that it can take months to spot all the content issues on a website, especially a big one with previously neglected SEO.

The good news is that you can charge a lot of money for it, and it’s a one-time job. Once you complete your audit, all that’s left is to make the changes you’ve planned and see the results.

Do you have any tricks or tools to streamline the process? Share your thoughts in the comments!