Page speed has long been a ranking factor for Google: it was first announced back in 2010, reinforced with another update in 2018, and cemented with the introduction of Core Web Vitals in 2020. In this article, we are going to look at what page speed is today, how to measure it, and, most importantly, how to improve page speed scores for your website.

For a long time, Google itself has struggled with measuring page speed. What are the right metrics? Do you use field data or lab data? Do you time the entire page or just the top part? There are dozens of metrics that go into page speed and it’s been a long road to figure out which of them really matter for the user.

In the end, Google settled on a set of three metrics that are now considered the most important for page speed: Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS). Known jointly as Core Web Vitals, these metrics are meant to measure perceived page speed, meaning how fast the page feels to a user, as opposed to raw technical load times.

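Largest Contentful Paint is the time it takes for the largest image or text block visible in the viewport to render, measured from when the page starts loading. The benchmarks for this metric are as follows: good at 2.5 seconds or less, needs improvement between 2.5 and 4 seconds, and poor above 4 seconds.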

It is common for the largest element to be an image, so image optimization is the main contributor to this metric. On top of that, LCP depends on server response times, render-blocking code, and client-side rendering.

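First Input Delay is the time between a user's first interaction with the page (a click, a tap, or a key press) and the moment the browser is actually able to start processing it. Say, a button is painted on the page, you click on it, but nothing happens because the browser is still busy running other code. The benchmarks for this metric are as follows: good at 100 milliseconds or less, needs improvement between 100 and 300 milliseconds, and poor above 300 milliseconds.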

FID can be improved with code splitting and by shipping less JavaScript.

Cumulative Layout Shift measures how much the visible elements on the page unexpectedly move around while it loads. Say, a page looks like it's ready to use, but then a new image appears at the top and the rest of the content is pushed downward: that's a layout shift. CLS is a unitless score, and the benchmarks for this metric are as follows: good at 0.1 or less, needs improvement between 0.1 and 0.25, and poor above 0.25.

CLS depends on properly set size attributes and on having your resources load in a specified sequence, top to bottom.
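Google's published thresholds (LCP: 2.5 s and 4 s, FID: 100 ms and 300 ms, CLS: 0.1 and 0.25) translate directly into a small rating function. The TypeScript sketch below is illustrative only: the names are made up, not part of any Google API, and it assumes the inputs are already 75th-percentile field values, which is the percentile Google's reports evaluate.

```typescript
// Rating buckets used in Google's Core Web Vitals reports.
type Rating = "good" | "needs improvement" | "poor";

interface Thresholds {
  good: number; // values at or below this are "good"
  poor: number; // values above this are "poor"
}

// LCP and FID are in milliseconds; CLS is a unitless score.
const THRESHOLDS: Record<"lcp" | "fid" | "cls", Thresholds> = {
  lcp: { good: 2500, poor: 4000 },
  fid: { good: 100, poor: 300 },
  cls: { good: 0.1, poor: 0.25 },
};

function rate(metric: keyof typeof THRESHOLDS, value: number): Rating {
  const t = THRESHOLDS[metric];
  if (value <= t.good) return "good";
  if (value <= t.poor) return "needs improvement";
  return "poor";
}

// A page passes only if all three metrics are rated "good".
function passes(lcpMs: number, fidMs: number, cls: number): boolean {
  return (
    rate("lcp", lcpMs) === "good" &&
    rate("fid", fidMs) === "good" &&
    rate("cls", cls) === "good"
  );
}

console.log(rate("lcp", 3100)); // needs improvement
console.log(passes(2100, 80, 0.05)); // true
```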

There are plenty of tools provided by Google that offer Core Web Vitals as a part of their page audit:

One problem is that some of the tools use lab data instead of field data, while Google ranks your pages based exclusively on field data. You can tell these tools apart because they replace the field-only FID metric with the lab-measured TBT (Total Blocking Time) metric.

The other problem is that most of the tools can only evaluate one page at a time, which is not a practical approach to optimizing your entire website.

Of the Google tools listed above, the best one to use is probably Google Search Console. There, you can go to Experience > Core Web Vitals and see the report for all of your pages at once:

Under the report, there is a list of all failed metrics:

From there, you can drill down into the specific issues within each metric, then into the pages affected by each issue, and after a few clicks you'll end up in the PageSpeed Insights report for a specific page. So, although the top-level report covers your whole website at once, figuring out which pages are affected by which issues can be a tiresome process.

An arguably better way to measure page speed is to use WebSite Auditor. There you can go to Site Structure > Site Audit and get a bulk page speed report for your entire website as well as view all affected pages — all from a single dashboard:

Or you can switch to Site Audit > Pages > Page Speed to view a list of pages alongside the speed issues that affect them. Click on any page and you'll also get a list of page elements that can be optimized for better performance:

Overall, I find WebSite Auditor more convenient for doing the actual work of optimizing your pages, while Google Search Console is more of a reporting tool.

Now that you have a list of affected pages, it’s time to work on improving your page speed. Below are some of the most common optimization opportunities as well as some advice on how you can exploit them.

Let's kick it off with something simple. When you omit image dimensions from your code, the browser doesn't know how much space to reserve for your images and videos until they have downloaded. As a result, the content on your page jumps around when they appear, which negatively affects your CLS score.

To avoid this issue, always set width and height attributes for your images, like so:

<img src="pillow.jpg" width="640" height="360" alt="purple pillow with flower pattern" />

With this information, modern browsers can calculate your image's aspect ratio and reserve enough room on the page before the image itself arrives. This should take care of most image-related CLS issues, if not all of them.
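The calculation the browser performs is simple proportion: the width and height attributes give it an aspect ratio, which it scales to whatever width the layout assigns (as long as your CSS lets the height follow, for example with `height: auto`). The TypeScript sketch below mirrors that arithmetic; the function name is hypothetical.

```typescript
// Height in CSS pixels the browser can reserve for an image before it downloads,
// given the width/height attributes and the width the layout actually renders.
function reservedHeight(attrWidth: number, attrHeight: number, renderedWidth: number): number {
  if (attrWidth <= 0 || attrHeight <= 0) {
    throw new Error("width and height attributes must be positive");
  }
  // Same as renderedWidth / (attrWidth / attrHeight), ordered to stay exact for integers.
  return (renderedWidth * attrHeight) / attrWidth;
}

// The pillow image from the example (640x360) squeezed into a 320px-wide mobile column:
console.log(reservedHeight(640, 360, 320)); // 180
```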

Not all image formats are created equal. Our trusty JPEG and PNG formats offer much worse compression and quality than next-gen formats like AVIF, JPEG 2000, JPEG XR, and WebP.

Of these formats, WebP is probably the one to consider first. It supports both lossy and lossless compression and allows for transparency and animation. On top of that, WebP files are generally 25% to 35% lighter than PNGs and JPEGs of similar quality. And although limited browser support used to be a common concern, Safari added WebP support in version 14, so total browser support for the format is now over 90%.

If your website is built on WordPress, you can easily create a WebP copy of your images with an image optimization plugin like Imagify. If your website is not built on a CMS platform or you don't want to install too many plugins, you can convert your images using online converters or graphics editors.

Whether you use next-gen image formats or not, compressing your images is still a valid way of reducing overall page size. Again, if your website is built on WordPress, you can compress your images in bulk with image plugins like WP Smush. You can also use online compressors if you don't want to install too many plugins and risk slowing your website down. As a last resort, use graphics editors to compress your images before uploading them to your website.

Just to give you an idea of how much you can save, I ran a quick test on a random selection of images from my downloads folder:

Using an online compressor, I saved between 30% and 75% per image and 68% in total (the heavier images carried more weight and skewed the overall percentage).

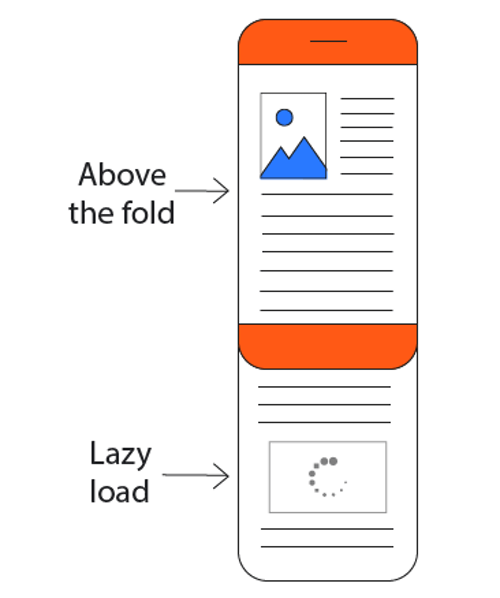
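The gap between a per-image range and the overall figure comes from weighting: the total saving is computed on bytes, so large files dominate it. The TypeScript sketch below computes both numbers; the file sizes in the example are made up for illustration and are not the ones from my test.

```typescript
interface ImageResult {
  name: string;
  beforeBytes: number;
  afterBytes: number;
}

// Percentage saved on a single file.
function savingPercent(r: ImageResult): number {
  return (100 * (r.beforeBytes - r.afterBytes)) / r.beforeBytes;
}

// Percentage saved across all files: total bytes saved / total bytes before.
// Heavier images contribute more bytes, so they pull this figure toward their own saving.
function totalSavingPercent(results: ImageResult[]): number {
  const before = results.reduce((sum, r) => sum + r.beforeBytes, 0);
  const after = results.reduce((sum, r) => sum + r.afterBytes, 0);
  return (100 * (before - after)) / before;
}

// Hypothetical sizes for illustration only.
const sample: ImageResult[] = [
  { name: "small.png", beforeBytes: 100_000, afterBytes: 70_000 }, // 30% saved
  { name: "large.jpg", beforeBytes: 900_000, afterBytes: 225_000 }, // 75% saved
];

console.log(sample.map(savingPercent)); // [ 30, 75 ]
console.log(totalSavingPercent(sample)); // 70.5
```

Note how the total lands much closer to the large file's 75% than to the simple average of 52.5%.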

Offscreen images are the ones that appear below the fold, meaning the user won't see them until they scroll beyond the initial screen. This will be a common theme throughout the rest of the article: loading everything below the fold should be postponed until the above-the-fold elements are fully loaded. The above-the-fold area is largely what Google uses to measure your page speed, so it's where most of your optimization effort should be focused.

The technique of dealing with offscreen images is called lazy loading. Basically, above-the-fold images are loaded first, and offscreen images are only loaded as the user scrolls down the page. Consult this guide on lazy-loading your images for more information.
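Modern browsers also support native lazy loading through the `loading="lazy"` attribute on `<img>` tags, so the main decision is which images get it. The TypeScript sketch below partitions a page's images by their distance from the top of the page; the `ImageSlot` shape and the 800px viewport height are assumptions for illustration, since in a real page these positions are measured in the browser.

```typescript
interface ImageSlot {
  src: string;
  top: number; // distance in px from the top of the page to the image's top edge
}

// Split images into those visible on the first screen (load right away)
// and offscreen ones (candidates for loading="lazy").
function partitionByFold(images: ImageSlot[], viewportHeight: number) {
  const eager: string[] = [];
  const lazy: string[] = [];
  for (const img of images) {
    (img.top < viewportHeight ? eager : lazy).push(img.src);
  }
  return { eager, lazy };
}

const { eager, lazy } = partitionByFold(
  [
    { src: "hero.jpg", top: 0 },
    { src: "pillow.jpg", top: 520 },
    { src: "footer-banner.jpg", top: 2400 },
  ],
  800, // assumed viewport height in px
);

console.log(eager); // [ 'hero.jpg', 'pillow.jpg' ]
console.log(lazy); // [ 'footer-banner.jpg' ]
```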

It might sound counterintuitive, but GIFs often have a larger file size than videos of the same animation. The reason is that GIF relies on a decades-old compression scheme, while modern video codecs mostly store the differences between consecutive frames. Converting a large GIF to a video can make the file five times smaller or more. So, if your page speed report tells you to use video formats for animated content, you should take it seriously.
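Size comparisons like this are easy to garble, because "X times smaller" and "X% smaller" are different scales: a file can never shrink by more than 100%. The TypeScript helpers below convert between the two; the byte counts in the example are hypothetical.

```typescript
// How many times smaller the new file is (5 means one fifth of the original size).
function timesSmaller(beforeBytes: number, afterBytes: number): number {
  return beforeBytes / afterBytes;
}

// Percentage reduction in size; always below 100% for a non-empty result.
function percentReduction(beforeBytes: number, afterBytes: number): number {
  return (100 * (beforeBytes - afterBytes)) / beforeBytes;
}

// Hypothetical example: a 3,000 KB GIF converted to a 600 KB video.
const gifKb = 3000;
const videoKb = 600;
console.log(timesSmaller(gifKb, videoKb)); // 5
console.log(percentReduction(gifKb, videoKb)); // 80
```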

To convert GIFs to videos, you can use any online converter or download a tool like FFmpeg. Google actually recommends creating two video formats: WebM and MP4. WebM is similar to WebP in that it's lighter, but it isn't yet supported by all browsers. So, when you add your video to the page, you should list the WebM version first and the MP4 version second as a fallback:

<video autoplay loop muted playsinline>
  <source src="animation.webm" type="video/webm">
  <source src="animation.mp4" type="video/mp4">
</video>

Note that the video element also has four additional attributes: autoplay, loop, muted, and playsinline. These attributes make your video behave like a GIF: it starts playing automatically, loops, has no sound, and plays inline within the page.

Unused CSS can slow down a browser's construction of the render tree. The thing is, a browser must walk the entire DOM tree and check what CSS rules apply to every node. Therefore, the more unused CSS there is, the more time a browser will need to calculate the styles for each node.

The goal here is to identify the pieces of CSS that are unused or non-critical and either remove them completely or change the order in which they load. Please consult this guide on deferring unused CSS.
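Tools that find unused CSS essentially compare the selectors in your stylesheets against what actually appears in your markup (Chrome DevTools also has a Coverage panel that shows this at runtime). Here is a deliberately naive TypeScript sketch of the idea: it only understands rules whose whole selector is a single class, such as `.promo-banner { ... }`, and it ignores @media blocks, complex selectors, and classes added by JavaScript, all of which real tools handle.

```typescript
// Collect every class name used in class="..." attributes of the markup.
function usedClasses(html: string): Set<string> {
  const used = new Set<string>();
  const attrs = html.match(/class="[^"]*"/g) ?? [];
  for (const attr of attrs) {
    // attr looks like class="a b c": drop the 7-character prefix and the closing quote.
    for (const name of attr.slice(7, -1).split(/\s+/)) {
      if (name) used.add(name);
    }
  }
  return used;
}

// Return rules whose whole selector is one class that never appears in the markup.
function unusedClassRules(css: string, html: string): string[] {
  const used = usedClasses(html);
  return css
    .split("}")
    .map((rule) => rule.trim())
    .filter((rule) => {
      const brace = rule.indexOf("{");
      if (brace === -1) return false; // empty remainder after the last rule
      const selector = rule.slice(0, brace).trim();
      const single = /^\.([A-Za-z_-][\w-]*)$/.exec(selector);
      return single !== null && !used.has(single[1]);
    })
    .map((rule) => `${rule}}`);
}

const css = ".hero { color: navy; } .promo-banner { display: flex; } .footer a { color: gray; }";
const html = '<div class="hero main"><a href="/">Home</a></div>';
console.log(unusedClassRules(css, html)); // [ '.promo-banner { display: flex;}' ]
```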

JS and CSS files often contain unnecessary comments, spaces, line breaks, and pieces of code. Removing them can make your files up to 50% lighter, although typical savings are much smaller. It's a modest contribution to your page speed, but it's still worth a try.

If you have a small website, you can minify the code using online minifiers, like CSS Minifier, JavaScript Minifier, and HTML Compressor. If your website is built on a CMS platform like WordPress, there are plugins that can do the work for you. For a custom-built website, please refer to this guide on minifying CSS and this one on minifying JS.
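To see what a minifier actually strips, here is a naive CSS minifier in TypeScript. It's a sketch only: it works with regular expressions, so it would mangle edge cases such as strings or url() values that contain comment markers or significant spaces. For production, use an established minifier.

```typescript
function minifyCss(css: string): string {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "") // strip /* comments */
    .replace(/\s+/g, " ") // collapse runs of spaces and line breaks into one space
    .replace(/\s*([{};,])\s*/g, "$1") // drop spaces around braces, semicolons, and commas
    .replace(/:\s+/g, ":") // drop spaces after colons in declarations
    .replace(/;}/g, "}") // the last semicolon in a block is optional
    .trim();
}

const source = `
/* Main heading */
h1 {
  color: navy;
  margin: 0 auto;
}
`;
const minified = minifyCss(source);
console.log(minified); // h1{color:navy;margin:0 auto}
console.log(`${source.length} -> ${minified.length} characters`);
```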

By default, CSS is a render-blocking resource. Your page is not going to be rendered until the browser fetches and parses CSS files, which can be quite time-consuming.

To solve this, you can extract only those styles that are required for the above-the-fold area of your page and inline them in a <style> tag in the <head> of your HTML document. The rest of your CSS can then be loaded asynchronously. This can significantly improve your LCP scores and make your pages appear faster to users.

Check out this guide on extracting critical CSS for more details.
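The resulting markup usually follows one pattern: critical rules in an inline <style> block, and the full stylesheet requested in a way that doesn't block rendering. One common trick is to load the stylesheet with `media="print"` (which doesn't block rendering on screen) and switch it to `media="all"` once it loads. The TypeScript sketch below assembles that <head> markup; the function name and file path are illustrative assumptions, and deciding which rules count as critical is left to a dedicated tool.

```typescript
// Build <head> contents: critical CSS inlined, full stylesheet loaded without blocking rendering.
function headWithCriticalCss(criticalCss: string, stylesheetHref: string): string {
  return [
    `<style>${criticalCss}</style>`,
    // A print stylesheet doesn't block screen rendering; onload switches it to apply everywhere.
    `<link rel="stylesheet" href="${stylesheetHref}" media="print" onload="this.media='all'">`,
    // Fallback for visitors with JavaScript disabled.
    `<noscript><link rel="stylesheet" href="${stylesheetHref}"></noscript>`,
  ].join("\n");
}

console.log(headWithCriticalCss("h1{color:navy}", "/css/style.css"));
```

The function assumes `stylesheetHref` is a trusted path from your own build, since it is inserted into the markup without escaping.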

The most unpleasant thing about server response delays is that they can have many different causes, such as slow routing, slow application logic, CPU starvation, memory starvation, slow database queries, slow frameworks, and so on.

An easy non-dev solution to these issues is to switch to better hosting, which in many cases means moving from shared to managed hosting. Managed hosting usually comes with a CDN and other content delivery optimizations that positively affect page speed. But if you want to get your hands dirty, here is a more detailed guide on fixing an overloaded server.

For those looking for a quick improvement without diving into the technical details, switching to a high-quality hosting provider can make a significant difference. SiteGround, for example, offers fast and secure hosting tailored for small and medium-sized websites and businesses. Their services include a free CDN, enhanced caching, and an optimized PHP implementation, all of which contribute to faster page loading times and a more responsive site overall.

Third-party resources, like social sharing buttons and video player embeds, tend to be very resource-heavy. Moreover, whenever the browser encounters a regular script, it pauses parsing the HTML until the script has been downloaded and executed. All of this can add up to a measurable drop in page speed.

If any of your third-party resources are non-essential, i.e. they do not matter for the appearance or function of the above-the-fold area, you should move them out of the critical rendering path. To load third-party scripts more efficiently, you can use either the async or the defer attribute. The async attribute is the softer option: the script downloads in parallel with HTML parsing, but it executes as soon as the download finishes, and parsing pauses while it runs. The defer attribute goes further: the script also downloads in parallel, but it only executes after the HTML has been fully parsed, and deferred scripts run in the order they appear in the document.
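The difference between the two attributes is easiest to see in the order in which scripts end up running. The TypeScript sketch below is a simplified model, not browser code: it assumes each script's download finish time (`readyAt`) is known, ignores how long the scripts themselves take to run, and treats `parseEndAt` as the moment HTML parsing finishes. Under these assumptions, async scripts run as soon as they arrive, while deferred scripts wait for parsing and then run in document order.

```typescript
type LoadMode = "async" | "defer";

interface ScriptTag {
  src: string;
  mode: LoadMode;
  readyAt: number; // ms after navigation when the download finishes (assumed known)
}

// Script sources in the order they would execute under this simplified model.
function executionOrder(scripts: ScriptTag[], parseEndAt: number): string[] {
  const runs: { src: string; at: number; index: number }[] = [];
  let lastDeferAt = parseEndAt; // deferred scripts never run before parsing ends
  scripts.forEach((s, index) => {
    if (s.mode === "async") {
      // Runs as soon as it has downloaded, regardless of its position in the document.
      runs.push({ src: s.src, at: s.readyAt, index });
    } else {
      // Runs after parsing, after its own download, and after earlier deferred scripts.
      lastDeferAt = Math.max(lastDeferAt, s.readyAt);
      runs.push({ src: s.src, at: lastDeferAt, index });
    }
  });
  // Earlier time first; ties keep document order.
  runs.sort((a, b) => a.at - b.at || a.index - b.index);
  return runs.map((r) => r.src);
}

const order = executionOrder(
  [
    { src: "analytics.js", mode: "async", readyAt: 300 },
    { src: "app.js", mode: "defer", readyAt: 120 },
    { src: "share-buttons.js", mode: "async", readyAt: 40 },
    { src: "widgets.js", mode: "defer", readyAt: 80 },
  ],
  200,
);
console.log(order); // [ 'share-buttons.js', 'app.js', 'widgets.js', 'analytics.js' ]
```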

As a rule, establishing connections, especially secure ones, takes a lot of time. It requires a DNS lookup, a TCP handshake, and, for HTTPS, a TLS handshake with a key exchange, which together add several roundtrips to the server responsible for the user's request. To save this precious time, you can pre-connect to the required origins ahead of time.

To pre-connect your website to a third-party source, you only need to add a link tag to your page:

<link rel="preconnect" href="https://example.com">

After you implement the tag, the browser sets up the connection to that server early, so by the time the resource is actually requested, the connection is already open and your users are spared several roundtrips of waiting.
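To decide which origins deserve a preconnect hint, you can list the third-party origins your page requests resources from. The TypeScript sketch below does that with the standard `URL` class and prints a tag for each; the example URLs are hypothetical. Keep the list short, since every preconnect opens a connection whether or not it gets used, and note that origins serving fonts or other CORS requests also need the `crossorigin` attribute, which this sketch doesn't add.

```typescript
// Distinct third-party origins among a page's resource URLs, in first-seen order.
function thirdPartyOrigins(pageUrl: string, resourceUrls: string[]): string[] {
  const own = new URL(pageUrl).origin;
  const origins: string[] = [];
  for (const url of resourceUrls) {
    const origin = new URL(url, pageUrl).origin; // relative URLs resolve against the page
    if (origin !== own && !origins.includes(origin)) origins.push(origin);
  }
  return origins;
}

function preconnectTags(origins: string[]): string[] {
  return origins.map((o) => `<link rel="preconnect" href="${o}">`);
}

const origins = thirdPartyOrigins("https://www.example.com/blog/", [
  "/css/style.css",
  "https://cdn.example.net/player.js",
  "https://images.example.org/pillow.jpg",
  "https://cdn.example.net/player.css",
]);
console.log(preconnectTags(origins).join("\n"));
// <link rel="preconnect" href="https://cdn.example.net">
// <link rel="preconnect" href="https://images.example.org">
```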

Whenever a piece of JavaScript keeps the browser busy for longer than 50 ms (a so-called long task), your page may appear unresponsive to the user. To solve this issue, it is recommended to find these long tasks, break them up into smaller tasks, and let the browser handle user input in between. This way, there will be short windows of responsiveness built into your page loading process.

You can use the Performance panel in Chrome DevTools to identify overly long tasks, which are marked with red flags:

Once you identify long tasks on your pages, you can split them up into smaller tasks, delay their execution, or even move them off the main thread via a web worker.
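One common way to split a long task is to process work in small batches and yield to the event loop between them, so the browser gets a chance to respond to clicks and taps. The TypeScript sketch below yields with a zero-delay `setTimeout`, which works in browsers and Node alike; the helper names are hypothetical, and the batch size of 50 items is an arbitrary assumption you would tune so each batch stays well under 50 ms.

```typescript
// Resolve on a later turn of the event loop, letting pending input and rendering run first.
function yieldToMain(): Promise<void> {
  return new Promise((resolve) => setTimeout(() => resolve(), 0));
}

// Process items in small batches instead of one long, blocking loop.
async function processInChunks<T, R>(
  items: T[],
  work: (item: T) => R,
  batchSize = 50, // arbitrary; tune so each batch stays well under 50 ms
): Promise<R[]> {
  const results: R[] = [];
  for (let i = 0; i < items.length; i += batchSize) {
    for (const item of items.slice(i, i + batchSize)) {
      results.push(work(item));
    }
    await yieldToMain(); // a short window of responsiveness between batches
  }
  return results;
}

processInChunks([1, 2, 3, 4, 5], (n) => n * n, 2).then((squares) => {
  console.log(squares); // [ 1, 4, 9, 16, 25 ]
});
```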

Browsers decide on their own which resources to load first, and they usually fetch the most important ones, such as CSS, before scripts and images. Unfortunately, this isn't always the best way to go. By preloading resources, you can tell modern browsers up front what you'll need later, so they start fetching it earlier.

With the help of the <link rel="preload"> tag, you can inform the browser that a resource is needed as part of the code responsible for rendering the above-the-fold content, and make it fetch the resource as soon as possible.

Here is an example of how the tag can be used:

<link rel="preload" as="script" href="script.js" />

<link rel="preload" as="style" href="style.css" />

<link rel="preload" as="image" href="img.png" />

<link rel="preload" as="video" href="vid.webm" type="video/webm" />

<link rel="preload" href="font.woff2" as="font" type="font/woff2" crossorigin />

Please note that preloading doesn't raise a resource's priority: it is loaded with the same priority it would normally get. The difference is that the download starts earlier, because the browser learns about the resource ahead of time. For more detailed instructions, please consult this guide on preloading critical assets.

Without browser caching, every time you visit the same page, the entire page is loaded from scratch. With browser caching, some page resources are stored locally in the browser's cache, so only part of the page has to be loaded from the server. Naturally, the page loads much faster on repeat visits, and your overall page speed scores go up.

Normally, the goal is to cache as many page resources as you can for as long as you can, while making sure updated resources get revalidated so visitors don't see stale versions. You can control all of these parameters with HTTP headers that carry caching instructions, such as Cache-Control. A good place to start learning about HTTP caching is this guide from Google.
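A widely used policy is to give fingerprinted static files (whose names change whenever their content does) a very long lifetime, and to make HTML revalidate before every reuse so visitors always get the latest version. The TypeScript sketch below picks a Cache-Control value per path under that policy; the fingerprint pattern (a hash segment like `app.3f9a1c2b.js`) and the one-day lifetime for other static files are assumptions you would adapt to your own build and server.

```typescript
// Choose a Cache-Control header value for a requested path.
function cacheControlFor(path: string): string {
  // Fingerprinted assets such as /assets/app.3f9a1c2b.js: the URL changes whenever the
  // file changes, so browsers can keep them for a year without revalidating.
  if (/\.[0-9a-f]{8,}\.(js|css|woff2|png|jpe?g|webp|avif|svg)$/.test(path)) {
    return "public, max-age=31536000, immutable";
  }
  // HTML pages: browsers may store them but must revalidate with the server before reuse.
  if (path.endsWith(".html") || path.endsWith("/")) {
    return "no-cache";
  }
  // Other static files: an assumed one-day lifetime.
  return "public, max-age=86400";
}

console.log(cacheControlFor("/assets/app.3f9a1c2b.js")); // public, max-age=31536000, immutable
console.log(cacheControlFor("/blog/page-speed/")); // no-cache
console.log(cacheControlFor("/images/pillow.jpg")); // public, max-age=86400
```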

An overly large DOM tree with complicated style rules can hurt load speed, runtime performance, and memory usage. As a best practice, a DOM tree should have fewer than 1,500 nodes in total, a maximum depth of 32 nodes, and no parent node with more than 60 child nodes.

A very good practice is to remove the DOM nodes that you no longer need. To that end, consider removing nodes that aren't currently displayed from the loaded document and creating them only after a user scrolls down the page or clicks a button.
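Checking a page against those three limits takes a single walk over the tree. The TypeScript sketch below works on a minimal, hypothetical `DomNode` shape rather than a real browser DOM, counts the root as depth 1, and reports total nodes, maximum depth, and the largest number of children under one parent.

```typescript
interface DomNode {
  tag: string;
  children: DomNode[];
}

interface DomStats {
  totalNodes: number;
  maxDepth: number; // the root counts as depth 1
  maxChildren: number; // children of the widest parent
}

function domStats(root: DomNode): DomStats {
  const stats: DomStats = { totalNodes: 0, maxDepth: 0, maxChildren: 0 };
  // Iterative depth-first walk, so very deep trees can't overflow the call stack.
  const stack: { node: DomNode; depth: number }[] = [{ node: root, depth: 1 }];
  while (stack.length > 0) {
    const { node, depth } = stack.pop()!;
    stats.totalNodes += 1;
    stats.maxDepth = Math.max(stats.maxDepth, depth);
    stats.maxChildren = Math.max(stats.maxChildren, node.children.length);
    for (const child of node.children) stack.push({ node: child, depth: depth + 1 });
  }
  return stats;
}

// Limits: fewer than 1,500 nodes, depth of at most 32, at most 60 children per parent.
function domWarnings(s: DomStats): string[] {
  const warnings: string[] = [];
  if (s.totalNodes >= 1500) warnings.push(`too many nodes: ${s.totalNodes}`);
  if (s.maxDepth > 32) warnings.push(`tree too deep: ${s.maxDepth}`);
  if (s.maxChildren > 60) warnings.push(`parent with too many children: ${s.maxChildren}`);
  return warnings;
}

// A page with one 100-item list.
const item = (): DomNode => ({ tag: "li", children: [] });
const page: DomNode = {
  tag: "html",
  children: [{ tag: "body", children: [{ tag: "ul", children: Array.from({ length: 100 }, item) }] }],
};
const stats = domStats(page);
console.log(stats); // { totalNodes: 103, maxDepth: 4, maxChildren: 100 }
console.log(domWarnings(stats)); // [ 'parent with too many children: 100' ]
```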

Getting rid of all unnecessary redirects is one of the best things you can do to your site speed-wise. Every additional redirect slows down page rendering and adds one or more HTTP request-response roundtrips.

The best practice is to avoid redirects altogether. However, if you really need one, it's crucial to choose the right redirect type. It's best to use a 301 redirect for permanent redirection. But if, let's say, you want to send users to short-term promotional pages or device-specific URLs, temporary 302 redirects are the best option.
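Redirects tend to pile up into chains (for example, http to https, then to www, then to a trailing slash), and each hop costs another roundtrip. The TypeScript sketch below follows a redirect map, such as one you might export from your server configuration or a site crawl, and returns the full chain for a URL, so you can point links straight at the final destination. The map format is an assumption for illustration; the sketch also throws on redirect loops.

```typescript
// Follow redirects from `start`; returns every URL visited, with the final destination last.
function redirectChain(redirects: Map<string, string>, start: string): string[] {
  const chain = [start];
  let next = redirects.get(start);
  while (next !== undefined) {
    if (chain.includes(next)) {
      throw new Error(`redirect loop: ${[...chain, next].join(" -> ")}`);
    }
    chain.push(next);
    next = redirects.get(next);
  }
  return chain;
}

// Hypothetical redirect map: source URL -> target URL.
const redirects = new Map([
  ["http://example.com/pillows", "https://example.com/pillows"],
  ["https://example.com/pillows", "https://www.example.com/pillows"],
  ["https://www.example.com/pillows", "https://www.example.com/pillows/"],
]);
const chain = redirectChain(redirects, "http://example.com/pillows");
console.log(`${chain.length - 1} redirects before reaching ${chain[chain.length - 1]}`);
// 3 redirects before reaching https://www.example.com/pillows/
```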

The issues listed above are not all of the issues that can affect page speed, but rather the most common ones and the ones with the highest improvement potential. Make sure to tailor your optimization strategy to the issues reflected in your page speed report. Keep in mind that issues present across many of your website's pages can often be resolved in bulk by implementing sitewide changes.