How Does AI Detection Work? A Complete Guide to Identifying AI-Generated Content

AI detection refers to the methods and tools used to determine whether a piece of content was written by a human or generated by artificial intelligence.

With the explosion of AI writing tools and chatbots, AI content detection has become increasingly important. Why? Because businesses, educators, and readers all want to know whether the content in front of them is authentic.

In fact, a recent survey found that 45% of the U.S. population is now using some form of generative AI, affecting industries from HR (e.g. AI-written resumes) to education (AI-assisted essays).

In this guide, we’ll demystify how AI detection works, why it matters, the common techniques used, limitations to be aware of, and how you can leverage it in practice.

What is AI detection?

AI detection (or AI content detection) is the process of analyzing text (or other media) to judge whether it was created by a human writer or an AI system.

In simple terms, AI detectors are tools or algorithms trained to tell the difference between human-written text and AI-generated text. They examine patterns in the writing – things like word choice, sentence structure, consistency, and complexity – that might reveal a tell-tale “AI signature”.

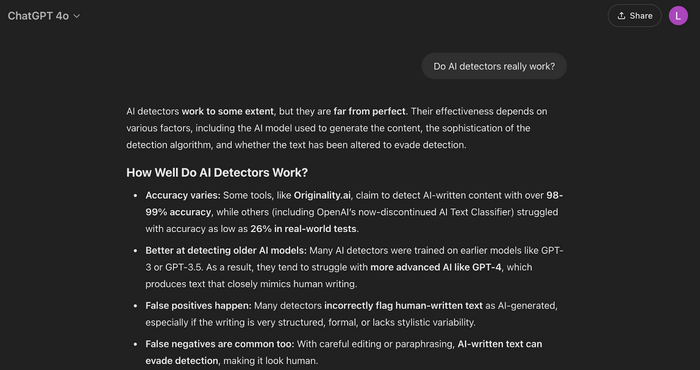

Before we jump into more detail, I thought it would be funny to ask ChatGPT about its take on AI detectors:

Anyway, as AI-generated content becomes more common, there are legitimate concerns about originality, accuracy, and honesty in content creation.

For instance, teachers might use AI detection to catch students submitting AI-written essays, and editors might use it to ensure an article has a genuine human touch.

Even search engines are believed to pay attention – there’s evidence Google uses AI content detection as part of its search quality evaluations (though Google’s official stance is that helpful content is what counts, regardless of how it’s produced). In any case, identifying AI-written content can help maintain transparency.

How does AI detection work?

How do AI detectors actually figure out who (or what) wrote the text? They use a combination of computational linguistics and machine learning. Here’s an in-depth look at the typical process:

Step 1: Text processing (tokenization)

First, the content is broken down into smaller units called tokens. Tokens can be words or even sub-word fragments.

By splitting the text, the AI detector can analyze the frequency and sequence of words more effectively.

For example, the sentence “AI detection tools are increasingly important.” might be tokenized into “ai”, “detection”, “tools”, “are”, “increasingly”, “important”. This step normalizes the text (often lowercasing words, removing extra spaces, etc.) so that analysis isn’t thrown off by simple differences in formatting.
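To make the tokenization step concrete, here is a minimal word-level sketch in Python. It is an illustration only: the `tokenize` function is a name made up for this example, and production detectors typically rely on the sub-word tokenizer of a specific language model rather than a simple regular expression.

```python
import re

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens, dropping punctuation."""
    # \w+ matches runs of letters, digits, and underscores. The optional
    # group keeps straight apostrophes inside words, so "isn't" stays one token.
    return re.findall(r"\w+(?:'\w+)*", text.lower())

print(tokenize("AI detection tools are increasingly important."))
# ['ai', 'detection', 'tools', 'are', 'increasingly', 'important']
```

Lowercasing and dropping punctuation are normalization choices made for this sketch; a detector that treats punctuation as a style signal would keep it.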

Step 2: Feature analysis

Once tokenized, the detector examines various features of the writing. This is the core of how AI detection works. Detectors look at things like:

Predictability of text (perplexity)

How likely is each word or phrase in the text, given the words that come before it? If the text is too predictable (meaning an AI model finds the word choices very unsurprising), it might indicate AI generation. If it’s more surprising or creative, it leans human. We’ll explain perplexity in detail shortly.

Stylistic variability (burstiness & more)

Does the writing have a mix of short and long sentences? Does it repeat certain phrases a lot in one section and then not at all later? Human writers tend to have more variation, whereas AI might maintain a steadier, more uniform style.

Repetition patterns (N-grams)

The detector checks for repeated sequences of words or phrases. AI-generated text can sometimes reuse common phrases or sentence structures, especially if it’s sticking to its training data patterns. If many of the same word sequences appear frequently, that’s a red flag for AI.

Vocabulary and complexity

Some tools analyze vocabulary richness or complexity of grammar. Is the text using an unusually consistent level of complexity (either too simple or trying too hard with complex words uniformly)? That could be AI. Human writing often has irregularities – maybe a casual phrase here, a complex sentence there – whereas AI might maintain a certain average complexity throughout.

Semantic embeddings

Advanced detectors convert the text into numerical vectors (embeddings) that capture meaning. By doing so, they can compare the semantics of the content to known AI-written text. If the vector representation of your text is very close to vectors of AI-generated examples, it might signal AI involvement.
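The embedding comparison can be sketched with plain cosine similarity. This is a toy illustration under loud assumptions: real embeddings come from a trained model and have hundreds of dimensions, whereas the function names here (`cosine_similarity`, `centroid`, `closer_to_ai`) and the tiny two-dimensional vectors are hypothetical stand-ins.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def centroid(vectors):
    """Element-wise average of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def closer_to_ai(text_vec, ai_vecs, human_vecs):
    """True if the text vector is more similar to the AI examples' centroid."""
    ai_sim = cosine_similarity(text_vec, centroid(ai_vecs))
    human_sim = cosine_similarity(text_vec, centroid(human_vecs))
    return ai_sim > human_sim

# Hypothetical 2-D "embeddings", invented purely for illustration.
ai_examples = [[1.0, 0.0], [0.9, 0.1]]
human_examples = [[0.0, 1.0], [0.1, 0.9]]
print(closer_to_ai([0.8, 0.2], ai_examples, human_examples))
```

Comparing against a centroid is just one simple option; a detector could equally feed the embedding into a trained classifier.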

Machine learning classification

Most modern AI detectors use machine learning models (classifiers) under the hood. These models are trained on large datasets of text that are labeled as “human-written” or “AI-generated.” During training, the model learns the differences in patterns between the two categories. Classifiers essentially sort text into “likely human” vs “likely AI” based on patterns they’ve learned.

Some detectors employ simple models that look at a handful of indicators, while others use complex neural networks. For example, a detector might have been trained on hundreds of essays written by ChatGPT (AI) and hundreds written by students (human). Given a new essay, it uses what it learned to predict which category the essay falls into.
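As a rough sketch of the "simple model" end of that spectrum, the snippet below trains a tiny logistic regression on two features per text. Everything specific here is an assumption for illustration: the feature values are invented, the two features (a scaled perplexity and a burstiness score) are just one plausible choice, and real detectors train on far larger datasets with library-grade optimizers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(samples, labels, epochs=500, lr=0.5):
    """Fit weights and bias with plain stochastic gradient descent."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            error = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict_ai_probability(x, weights, bias):
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

# Hypothetical training data: [scaled perplexity, burstiness]; label 1 = AI.
samples = [[0.2, 0.1], [0.3, 0.2], [0.25, 0.15],   # AI-like: low on both
           [0.8, 0.9], [0.7, 0.8], [0.9, 0.7]]     # human-like: high on both
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic(samples, labels)
print(predict_ai_probability([0.2, 0.1], weights, bias))
print(predict_ai_probability([0.8, 0.9], weights, bias))
```

The output of `predict_ai_probability` is the kind of number that later gets reported as an "AI likelihood" score.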

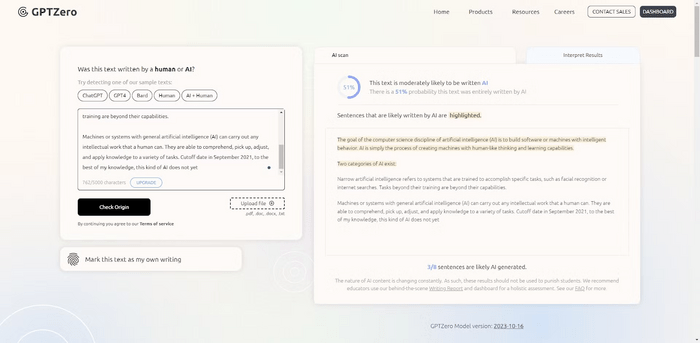

Many detectors combine multiple approaches – for instance, one popular tool, GPTZero, first uses fast statistical checks like perplexity, and then may apply deeper analysis using a neural network if needed.

Step 3: Probability scoring

The output of an AI detection process is often a score or probability. Rather than a simple yes/no, you’ll typically see something like “85% likely to be AI-generated” or a score out of 100. It’s important to interpret these correctly. A score of 60% AI means the tool thinks there’s a 60% chance the content was AI-generated – not that 60% of the words were written by AI and 40% by a human.

The detector is essentially saying “I’m somewhat confident this is AI-written.” Many tools also provide a confidence level for “Original” (human) content. Higher AI probability means the text shares characteristics with the AI examples it knows. Some tools have threshold-based outputs like “Human,” “AI,” or “Mixed” depending on the score range.
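A threshold-based output like the one described above can be sketched in a few lines. The cut-off values of 0.35 and 0.65 are assumptions chosen for this example; each tool picks its own ranges, and they are rarely published.

```python
def label_from_score(ai_probability, low=0.35, high=0.65):
    """Map an AI-likelihood score in [0, 1] to a coarse label."""
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    if ai_probability >= high:
        return "AI"
    if ai_probability <= low:
        return "Human"
    # Scores between the two cut-offs are treated as inconclusive or mixed.
    return "Mixed"

print(label_from_score(0.85))  # AI
print(label_from_score(0.60))  # Mixed
print(label_from_score(0.10))  # Human
```

Note that the label says nothing about how much of the text is AI-written; it only buckets the tool's confidence.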

Step 4: Cross-checking and data comparison

Some detectors also compare the text against a repository of known AI outputs or known human texts. They might use embeddings (as mentioned) or search for exact strings.

However, outright plagiarism-style matching is less useful here, because the text in question is usually original and the only open question is who (or what) wrote it. More often, it’s pattern matching – comparing the statistical fingerprint of the text against the fingerprints of AI vs human writing.

Step 5: Final determination

Using all the above, the detector makes its call. For instance, if a piece has very low perplexity (highly predictable text) and low burstiness (uniform style), an AI detector will likely flag it as AI-generated.

If the text has odd phrases or unpredictable word choices and varied style, it leans toward human. Many tools will highlight specific sentences as well, pointing out which parts seem AI-written (especially if a document is a mix).

Under the hood, these methods can get quite complex, but the goal is straightforward: spot the subtle clues that differentiate AI writing from human writing. It’s a bit like a forensic process for language.

In the next sections, we’ll break down some of the key components and techniques used in AI detection, so you can understand what those clues are.

Key components of AI detection

AI content detectors may vary in their algorithms, but most involve a few key components working together. Understanding these components helps you see why detectors give the results they do.

These components work in tandem. Think of an AI detector as a combination of a linguist and a statistician: one part is deeply analyzing writing style, while another part is crunching numbers and probabilities based on training data. Together, they produce an assessment of the text’s origin.

Common AI detection techniques

AI content detectors use several clever techniques to distinguish AI text from human text. Here we break down some of the most common AI detection methods and how they work:

Perplexity analysis

Perplexity measures how predictable a piece of text is. AI-generated content tends to have low perplexity (predictable word choices), while human writing has higher perplexity (more variation and creativity).

For example, an AI would likely complete “The sky is ___” with “blue” (low perplexity), whereas a human might write “a swirling canvas of forgotten dreams” (high perplexity).

AI detectors like GPTZero analyze sentence-level perplexity to flag potential AI content. If a passage has consistently low perplexity, it may indicate AI generation. However, perplexity alone isn’t definitive—it’s combined with other metrics like burstiness (sentence variation) for more accuracy.
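To see what "predictability" means numerically, here is a minimal sketch that computes perplexity as the exponential of the average negative log-probability of each word given the previous one. It assumes a toy bigram model with add-one smoothing trained on a twelve-word corpus; real detectors score text with a large neural language model, and the function names below are invented for this example.

```python
import math
from collections import Counter

def train_bigram_model(tokens):
    """Return a smoothed bigram probability function P(word | prev)."""
    unigram_counts = Counter(tokens)
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    # +1 reserves one vocabulary slot for any word unseen in training.
    vocab_size = len(unigram_counts) + 1

    def prob(prev, word):
        # Add-one (Laplace) smoothing keeps unseen pairs above zero probability.
        return (bigram_counts[(prev, word)] + 1) / (unigram_counts[prev] + vocab_size)

    return prob

def perplexity(tokens, prob):
    """exp(-(1/N) * sum of log P(word | prev)) over the N word pairs."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    total_log_prob = sum(math.log(prob(prev, word)) for prev, word in pairs)
    return math.exp(-total_log_prob / len(pairs))

corpus = "the sky is blue the sky is clear the sea is blue".split()
prob = train_bigram_model(corpus)
print(perplexity("the sky is blue".split(), prob))  # familiar word order
print(perplexity("blue is sky the".split(), prob))  # scrambled word order
```

The familiar sentence gets the lower perplexity because every word pair in it was seen during training, which is the same effect a detector looks for at much larger scale.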

Burstiness analysis

Burstiness measures variation in writing patterns. Human writing has high burstiness—mixing short and long sentences and clustering certain words, like mentioning "cake" repeatedly in a birthday story before switching topics. AI writing tends to be more uniform, with steady sentence lengths and evenly distributed words.

AI detectors analyze sentence structure and word repetition to flag low-burstiness text as AI-generated. Combined with perplexity (predictability of words), burstiness helps distinguish AI from human writing, as AI struggles to mimic natural fluctuations in style.
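Burstiness has no single agreed formula, so the sketch below uses one simple proxy: the coefficient of variation of sentence lengths (standard deviation divided by mean). That choice, and the function names, are assumptions for illustration rather than any particular tool's method.

```python
import re
import statistics

def sentence_lengths(text):
    """Word count of each sentence, splitting on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence lengths; 0.0 means perfectly uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("Stop. The storm rolled in over the hills before anyone "
          "had time to close the windows. We ran.")
print(burstiness(uniform))  # 0.0, every sentence has four words
print(burstiness(varied))
```

This only captures variation in sentence length; the word-clustering side of burstiness (the "cake" example above) would need a separate measure.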

N-gram analysis

An n-gram is a sequence of n consecutive words (a 2-gram, or bigram, is two words in a row; a 3-gram, or trigram, is three). AI models often repeat specific n-grams due to their predictive nature, while humans vary phrasing more naturally.

For example, if an AI-generated review repeatedly uses “This product is great because…” in multiple sections, it signals AI authorship. Detectors flag frequent n-gram repetition as a sign of machine-generated text, helping distinguish it from human writing.
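One simple way to quantify this kind of repetition is the share of n-grams in a text that occur more than once. The sketch below is a minimal illustration; the `repeated_ngram_ratio` name and the choice of trigrams are assumptions made for the example.

```python
from collections import Counter

def ngrams(tokens, n):
    """All runs of n consecutive tokens, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def repeated_ngram_ratio(tokens, n=3):
    """Fraction of n-gram occurrences that belong to an n-gram seen more than once."""
    grams = ngrams(tokens, n)
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeated = sum(count for count in counts.values() if count > 1)
    return repeated / len(grams)

review = ("this product is great because it works "
          "this product is great because it lasts").split()
print(repeated_ngram_ratio(review))  # 8 of the 12 trigrams are repeats
```

A high ratio on a long text is a hint, not proof: human writing with a refrain or a template (recipes, legal clauses) also scores high.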

Stylometry

Stylometry examines writing style to detect AI-generated text. It analyzes sentence complexity, vocabulary richness, function word usage, punctuation patterns, and idioms to determine if a text aligns more with human or AI writing.

For example, if a text frequently starts with "Furthermore," and avoids contractions, it may resemble AI-generated content. Some tools, like StyloAI, compare multiple style markers to flag AI authorship. Stylometry is effective but computationally intensive, often combined with perplexity analysis for greater accuracy.
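A few of the style markers mentioned above can be extracted with very little code. The sketch below is illustrative only: the short `FUNCTION_WORDS` set and the four features are assumptions chosen for the example, not the feature set of StyloAI or any other tool, and the regex only recognizes straight apostrophes.

```python
import re

# A tiny, hypothetical function-word list; real stylometry uses hundreds.
FUNCTION_WORDS = {"the", "a", "an", "of", "in", "to", "and", "but", "or", "that", "is", "it"}

def style_features(text):
    """Return a handful of simple stylometric measurements for a text."""
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words) or 1  # avoid division by zero on empty input
    return {
        "avg_sentence_length": len(words) / (len(sentences) or 1),
        "type_token_ratio": len(set(words)) / n_words,  # vocabulary richness
        "contraction_rate": sum("'" in w for w in words) / n_words,
        "function_word_rate": sum(w in FUNCTION_WORDS for w in words) / n_words,
    }

print(style_features("It isn't hard. We don't mind."))
```

On their own these numbers mean little; they become useful when compared against the same measurements taken from known human and known AI text.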

Other techniques (classification & ensemble methods)

Aside from the above, it’s worth noting a couple of other techniques:

Machine learning classification

This isn’t a separate technique per se (it underpins many of the above), but deserves mention.

Many detectors use an AI model (like a smaller transformer network) purely to classify text as AI or human. This model might internally use some of the features we discussed, but it learns its own way during training.

Such classifiers consider dozens of subtle cues at once and output a prediction. For example, OpenAI’s now-retired AI Text Classifier used a GPT model fine-tuned to predict if text was AI-written. Other examples include detectors based on RoBERTa or other transformer architectures that directly output “AI” or “Human” probabilities after reading the text.

Watermarking detection

Some AI text might carry an invisible watermark – a deliberate pattern implanted by the AI when generating text (if the AI’s creators implemented one). There’s active research in watermarking AI outputs by subtly biasing the AI to choose certain words from a special list, creating a statistically detectable pattern.

If such watermarks are present, a detector can spot them by checking for those patterns. For instance, if a language model was programmed to prefer certain synonyms or sentence lengths as a watermark, a detection tool can test for that distribution.
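Here is a simplified sketch of how such a statistical test might look, loosely modeled on the "green list" idea from published watermarking research. It is an assumption-heavy illustration, not any vendor's actual scheme: the hash-based `is_green` rule, the `gamma` fraction, and the function names are all invented for this example.

```python
import hashlib
import math

def is_green(prev_token, token, gamma=0.5):
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{prev_token}\x00{token}".encode("utf-8")).digest()
    # The first byte is roughly uniform in 0..255, so about a `gamma`
    # fraction of all tokens end up green for any given previous token.
    return digest[0] / 256 < gamma

def watermark_z_score(tokens, gamma=0.5):
    """How many standard deviations the green-token count sits above chance."""
    pairs = list(zip(tokens, tokens[1:]))
    n = len(pairs)
    if n == 0:
        return 0.0
    green = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    return (green - gamma * n) / math.sqrt(n * gamma * (1 - gamma))

print(watermark_z_score("the quick brown fox jumps over the lazy dog".split()))
```

Unwatermarked text should land near zero, while a generator that consistently favored green tokens would push the score well above it. This also shows why minor edits hurt detection: every replaced word removes one green token from the count.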

However, right now most public AI models (ChatGPT, etc.) do not obviously watermark their output, or the watermarks can be defeated by minor edits.

So watermark detection is a promising technique for the future (and some company and policy initiatives are pushing for it), but it’s not yet a common reality across all content.

DetectGPT and zero-shot methods

Academic proposals like DetectGPT involve taking a given text, making small changes (perturbations) to it, and seeing how an AI language model’s probability for that text changes.

The idea is that AI-generated text tends to sit near a local maximum of the AI model’s probability: the model already rates it about as likely as any nearby rewording.

If messing with the text significantly lowers the probability, it was likely written by the AI (because the original was the model’s own fluent output).

If the probability doesn’t drop much, a human might have written it. These methods are still experimental but show the creative approaches being explored to improve AI detection.
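The core comparison can be sketched as a single function. This is a bare-bones illustration of the perturbation idea, not the published implementation: `log_prob` and `perturb` are hypothetical callables that the caller must supply (in practice a language model's scoring function and a rewriting model), and the published method includes further details that are omitted here.

```python
import statistics

def perturbation_discrepancy(text, log_prob, perturb, n_perturbations=20):
    """Original log-probability minus the mean log-probability of perturbed copies.

    log_prob(text) -> float        hypothetical scoring function
    perturb(text, seed) -> str     hypothetical function returning a slightly reworded text
    """
    original = log_prob(text)
    perturbed = [log_prob(perturb(text, seed)) for seed in range(n_perturbations)]
    # A large positive gap means small edits made the text much less likely,
    # which is the signature described above for model-generated text.
    return original - statistics.mean(perturbed)
```

A detector built on this would compare the returned gap to a threshold tuned on known human and AI samples; choosing that threshold is the hard part and is not shown.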

In practice, modern AI detection tools often use a hybrid approach – combining several of the above techniques.

For example, a detector might first do quick checks (perplexity, burstiness, n-grams), and if those strongly indicate AI, it flags the content. If not, it might then run a more detailed stylometric or classification analysis to double-check. This layered strategy helps balance speed and accuracy, catching obvious AI text quickly and analyzing borderline cases more deeply.
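That layered flow is essentially a cascade, which can be sketched as follows. The `fast_check` and `deep_check` callables and the 0.9 cut-off are placeholders assumed for this example; they stand in for whatever cheap statistics and heavier classifier a given tool actually uses.

```python
def layered_detect(text, fast_check, deep_check, confident_above=0.9):
    """Run the cheap check first; only fall back to the expensive one if unsure.

    fast_check(text) -> float in [0, 1]   hypothetical quick AI-likelihood score
    deep_check(text) -> float in [0, 1]   hypothetical slower, more thorough score
    Returns (score, stage) where stage names the check that produced the score.
    """
    fast_score = fast_check(text)
    if fast_score >= confident_above:
        return fast_score, "fast"
    return deep_check(text), "deep"

# Stub checks, for illustration only.
print(layered_detect("sample text", lambda t: 0.95, lambda t: 0.50))  # (0.95, 'fast')
print(layered_detect("sample text", lambda t: 0.40, lambda t: 0.70))  # (0.7, 'deep')
```

The trade-off is that the fast stage's threshold controls both cost and error: set it too low and obvious cases are misjudged cheaply, too high and nearly everything pays for the deep check.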

Limitations of AI detection

AI detection is useful, but it’s far from perfect. Content marketers and the public should be aware of its limitations and not treat detector results as gospel truth. Here are some key limitations and challenges:

False positives (human text flagged as AI)

AI detectors sometimes mislabel human writing as AI-generated, leading to wrongful accusations of plagiarism. Formal, technical, or academic writing is especially prone to false positives due to its structured nature.

For example, a well-known historical speech was once flagged as 98% AI-generated due to its formal tone. Some writers have even resorted to adding mistakes to avoid detection. Since AI likelihood scores aren't absolute proof, detectors should never be the sole judge of authenticity.

False negatives (AI text passes as human)

False negatives happen when AI-generated text goes undetected, especially as models like GPT-4 become more human-like. Older detectors trained on GPT-2 or GPT-3 struggle with newer AI, reducing accuracy.

Simple tricks like paraphrasing or adding slight errors can bypass detection. Some tools even specialize in making AI text undetectable, highlighting the ongoing challenge for AI detectors to keep up.

Evolving AI models

Generative AI is evolving at breakneck speed. A detection tool developed today might be outdated in a few months when a new AI model emerges with a different writing style.

This arms race is a fundamental limitation: detectors need constant updates and training to keep up with the latest AI models.

We saw this with the rollout of GPT-4: content that sailed through detectors previously started triggering alarms (and vice versa) because GPT-4’s style differed. As one tester observed, “Most of them [detectors] passed eight months ago, and now several failed after the release of GPT-4 models.”

New AI models can produce more varied and convincing text, reducing the efficacy of older detection methods. This means any AI detection tool has a shelf-life tied to the state of AI at the time of its last major update.

Lack of context and understanding

Current AI detectors primarily analyze text in isolation. They look at what’s on the page, but not why it was written or the broader context. They also don’t truly “understand” the content the way a human reader would; they are pattern recognizers.

This can lead to mistakes. For example, a human might tell that a particular creative story is written by a person because of its emotional subtleties or context that only a person would know. An AI detector might miss that and focus on surface metrics. Conversely, a very factual Wikipedia-style entry written by a human could be flagged as AI simply because it’s dry and encyclopedic in tone.

In essence, detectors lack common sense and intent recognition. They cannot judge the purpose or truthfulness of content – only whether the writing style and statistics match known AI patterns. Language is complex, and there’s a risk of over-relying on automated detection without a human eye on the content as well.

Detector accuracy rates

It’s important to note that no AI detector is 100% accurate. Even the best ones have an error rate. OpenAI discontinued its own AI Text Classifier in July 2023, citing its low rate of accuracy: in OpenAI’s own evaluation, the tool correctly flagged only 26% of AI-written text as “likely AI-written,” while mislabeling about 9% of human-written text as AI.

Other studies have found that AI detection tools can be inconsistent. A study in the International Journal for Educational Integrity found that detectors were more accurate on content generated by GPT-3.5 than on GPT-4, and when it came to human-written texts, the tools showed inconsistencies and produced false positives.
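Headline accuracy figures can also mislead because of base rates. The short calculation below shows how often a flag is actually correct, given a detector's hit rate, its false positive rate, and the share of submissions that are really AI-written. All three input numbers in the example are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def precision_from_rates(true_positive_rate, false_positive_rate, prevalence):
    """Share of flagged texts that are really AI-written.

    prevalence is the fraction of all texts that are AI-written.
    """
    true_flags = true_positive_rate * prevalence
    false_flags = false_positive_rate * (1 - prevalence)
    if true_flags + false_flags == 0:
        return 0.0
    return true_flags / (true_flags + false_flags)

# Hypothetical detector: catches 90% of AI text, wrongly flags 5% of human text,
# used on a pool where only 10% of texts are AI-written.
print(precision_from_rates(0.90, 0.05, 0.10))  # about 0.67
```

In that scenario roughly one flag in three lands on a human writer, even though both error rates sound small, which is why a single flag should prompt a closer look rather than a verdict.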

Arms race and adaptation

Finally, consider the arms race nature of this field. As AI detectors improve, AI text generation adapts. We’re already seeing AI tools offering "humanizer" modes that intentionally increase perplexity or add randomness to evade detectors.

Also, if content creators know what features detectors look for, they might adjust their AI-generated content (or lightly edit it) to avoid those triggers. It’s similar to how spammers adapt to spam filters. This means AI detection might always be a step behind the best AI generators, especially if someone is actively trying to get around detection.

In high-stakes scenarios (like detecting AI-generated misinformation or academic fraud), a determined adversary could use multiple AI models, manual tweaking, or even train a custom model that doesn’t resemble the popular ones detectors know about. Such content could potentially fly under the radar. Detectors also primarily focus on English and a few major languages – AI content in less-studied languages might evade detection due to lack of training data.

Bottom line: AI detection is a helpful tool, but it has limitations in accuracy and reliability. False positives can wrongly punish honest writers, and false negatives can give a false sense of security. Always use AI detector results as guidance, not absolute proof. When in doubt, a human review and understanding of context are invaluable.

Best AI detection tools

Now that we’ve covered how AI detection works, let’s look at some of the best AI detection tools available today. If you’re a content marketer or just someone curious to check text, these are the popular tools (as of 2025) that people are using:

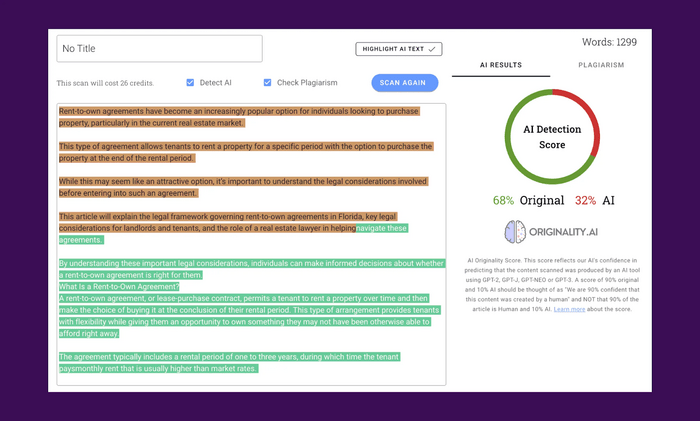

Originality.ai

A premium tool widely used by content marketers and SEO professionals, Originality.ai markets itself on accuracy: the company claims to detect GPT-4 content with 98-99% accuracy, a self-reported figure. It offers a user-friendly dashboard, a Chrome extension, and batch-scanning capabilities. However, it is a paid service, making it less accessible for casual users.

GPTZero

Originally designed for educators, GPTZero gained popularity for its use of perplexity and burstiness metrics to flag AI-generated text. It highlights AI-generated sentences and is free to use, making it a common choice for students and teachers. However, it sometimes mislabels formal human writing as AI-generated, leading to potential false positives.

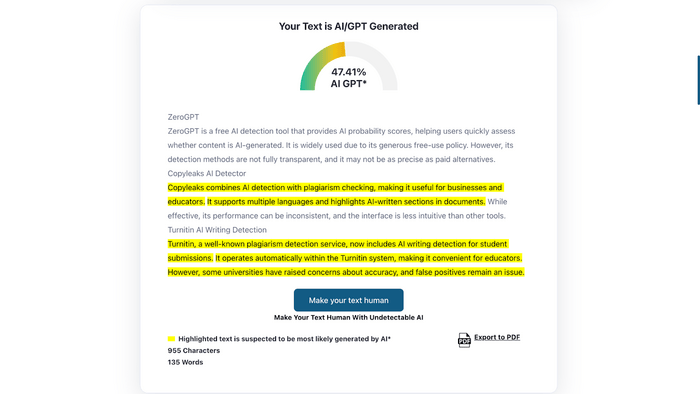

ZeroGPT

ZeroGPT is a free AI detection tool that provides AI probability scores, helping users quickly assess whether content is AI-generated. It is widely used due to its generous free-use policy. However, its detection methods are not fully transparent, and it may not be as precise as paid alternatives.

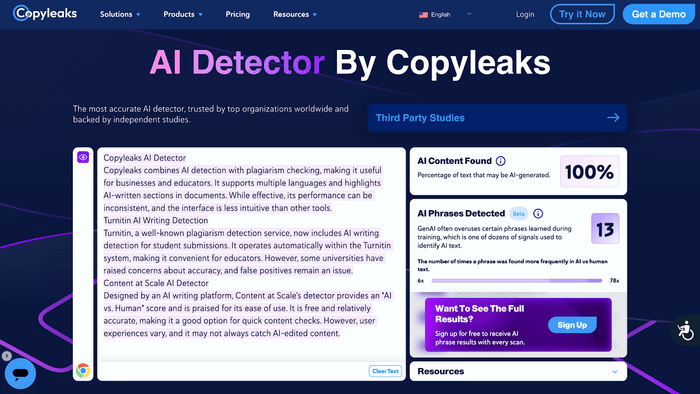

Copyleaks AI Detector

Copyleaks combines AI detection with plagiarism checking, making it useful for businesses and educators. It supports multiple languages and highlights AI-written sections in documents. While effective, its performance can be inconsistent, and the interface is less intuitive than other tools.

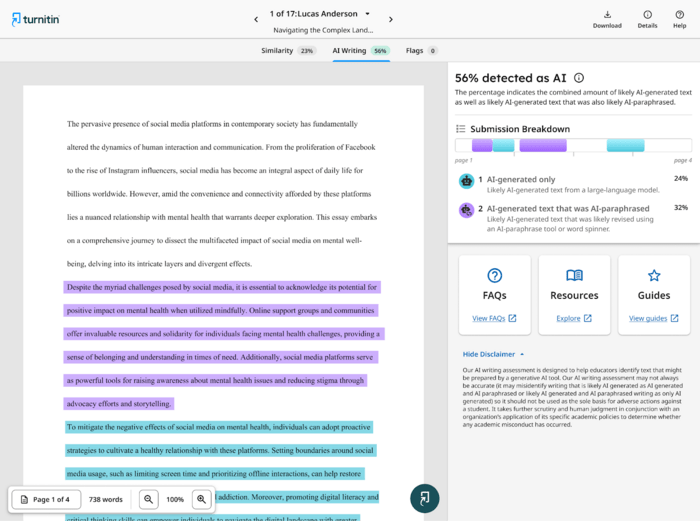

Turnitin AI Writing Detection

Turnitin, a well-known plagiarism detection service, now includes AI writing detection for student submissions. It operates automatically within the Turnitin system, making it convenient for educators. However, some universities have raised concerns about accuracy, and false positives remain an issue.

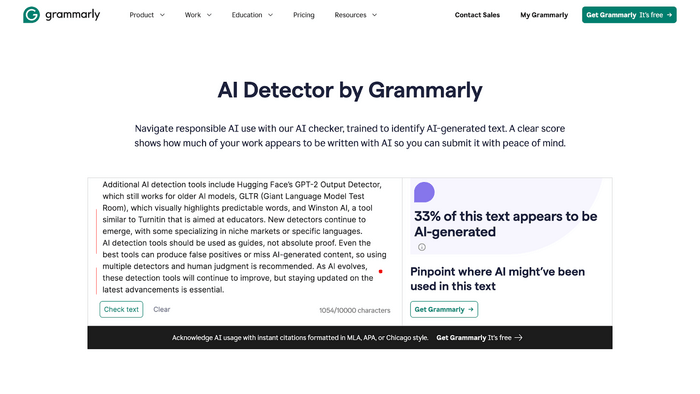

Grammarly AI Content Detector

Grammarly, best known for its writing assistance software, has introduced an AI content detector to help users identify AI-generated text. This tool is designed for professionals, educators, and businesses looking to verify content authenticity while improving writing quality.

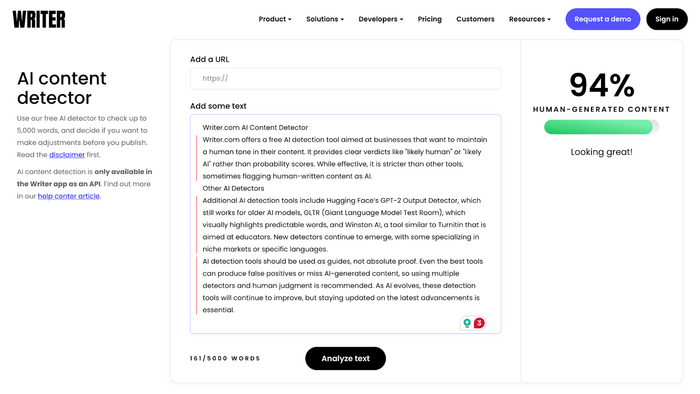

Writer.com AI Content Detector

Writer.com offers a free AI detection tool aimed at businesses that want to maintain a human tone in their content. It provides clear verdicts like "likely human" or "likely AI" rather than probability scores. While effective, it is stricter than other tools, sometimes flagging human-written content as AI.

Other AI detectors

Additional AI detection tools include Hugging Face’s GPT-2 Output Detector, which still works for older AI models, GLTR (Giant Language Model Test Room), which visually highlights predictable words, and Winston AI, a tool similar to Turnitin that is aimed at educators. New detectors continue to emerge, with some specializing in niche markets or specific languages.

AI detection tools should be used as guides, not absolute proof. Even the best tools can produce false positives or miss AI-generated content, so using multiple detectors and human judgment is recommended. As AI evolves, these detection tools will continue to improve, but staying updated on the latest advancements is essential.

Conclusion

AI detection is evolving rapidly, using methods like perplexity, burstiness, n-gram analysis, and stylometry to distinguish human and AI-generated text. These tools help content creators, educators, and businesses maintain authenticity, but they are not foolproof—false positives and negatives remain challenges.

While AI detectors like Originality.ai and GPTZero offer valuable insights, they should be used alongside human judgment. As AI advances, detection methods will improve, incorporating watermarking and multi-layered analysis. Staying informed and combining automated tools with critical oversight is key to preserving trust in content.